Apple Should Own The Term “Warrant Proof”

On March 11, 2016 by Jonathan Zdziarski

The Department of Justice, in a March 10 filing, accused Apple of outrightly making “warrant proof” devices, and accused Apple of obstruction of justice by making these devices so secure that they could not be searched, even with a warrant. While these words belonged to DOJ, I think Apple should own them. If you study our state laws, federal laws, and international treaties, you’ll see many examples of intellectual property that actually are protected against warrants. Yes, there are things in this country that are deemed warrant proof.

As per The State Department, Article 27.3 of the Vienna Convention on Diplomatic Relations states, a diplomatic pouch “shall not be opened or detained”. In other words, it’s warrant proof. No law enforcement agency in our country is permitted – under international treaty – to open a diplomatic pouch, and any warrants issued are null and void. Guidelines even permit for unaccompanied diplomatic pouches that are traveling without a diplomat or courier, which even further emphasizes the impetus for security of such pouches: they should have locks, and strong ones at that. Do we still have spying? Absolutely, and it’s illegal. It is not only reasonable then, but important to have a device like the iPhone – secure against illegal search and seizure.

An Example of “Warrant-Friendly Security”

On March 11, 2016 by Jonathan Zdziarski

The encryption on the iPhone is clearly doing its job. Good encryption doesn’t discriminate between attackers, it simply protects data – that’s its job, and it’s frustrating both criminals and law enforcement. The government has recently made arguments insisting that we must find a “balance” between protecting your privacy and providing a method for law enforcement to procure evidence with a warrant. If we don’t, the Department of Justice and the President himself have made it clear that such privacy could easily be legislated out of our products. Some think having a law enforcement backdoor is a good idea. Here, I present an example of what “warrant friendly” security looks like. It already exists. Apple has been using it for some time. It’s integrated into iCloud’s design.

Unlike the desktop backups that your iPhone makes, which can be encrypted with a backup password, the backups sent to iCloud are not encrypted this way. They are absolutely encrypted, but differently, in a way that allows Apple to provide iCloud data to law enforcement with a subpoena. Apple had advertised iCloud as “encrypted” (which is true) and secure. It still does advertise this today, in fact, the same way it has for the past few years:

“Apple takes data security and the privacy of your personal information very seriously. iCloud is built with industry-standard security practices and employs strict policies to protect your data.”

So with all of this security, it sure sounds like your iCloud data should be secure, and also warrant friendly – on the surface, this sounds like a great “balance between privacy and security”. Then, the unthinkable happened.

On Dormant Cyber Pathogens and Unicorns

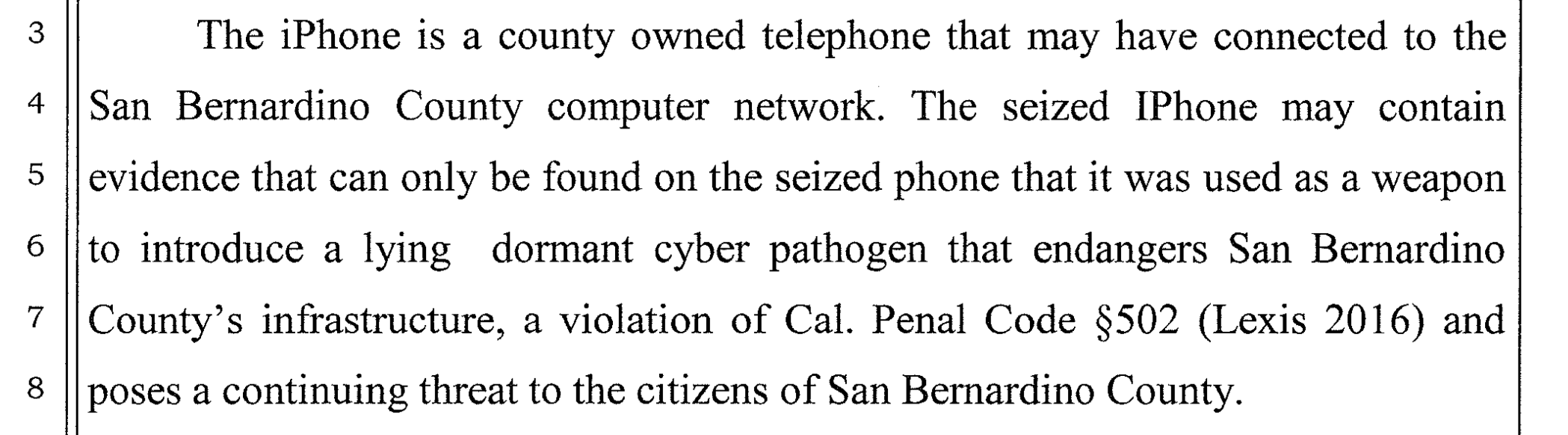

On March 3, 2016 by Jonathan Zdziarski

Gary Fagan, the Chief Deputy District Attorney for San Bernardino County, filed an amicus brief to the court in defense of the FBI compelling Apple to backdoor Farook’s iPhone. In this brief, DA Michael Ramos made the outrageous statement that Farook’s phone might contain a “lying dormant cyber pathogen”, a term that doesn’t actually exist in computer science, let alone in information security.

CIS Files Amici Curiae Brief in Apple Case

On March 3, 2016 by Jonathan Zdziarski

CIS sought to file a friend-of-the-court, or “amici curiae,” brief in the case today. We submitted the brief on behalf of a group of experts in iPhone security and applied cryptography: Dino Dai Zovi, Charlie Miller, Bruce Schneier, Prof. Hovav Shacham, Prof. Dan Wallach, Jonathan Zdziarski, and our colleague in CIS’s Crypto Policy Project, Prof. Dan Boneh. CIS is grateful to them for offering up

Mistakes in the San Bernardino Case

On March 2, 2016 by Jonathan Zdziarski

Many sat before Congress yesterday and made their cases for and against a backdoor into the iPhone. Little was said, however, of the mistakes that led us here before Congress in the first place, and many inaccurate statements went unchallenged.

The most notable mistake the media has caught onto has been the blunder of changing the iCloud password on Farook’s account, and Comey acknowledged this mistake before Congress.

“As I understand from the experts, there was a mistake made in that 24 hours after the attack where the [San Bernardino] county at the FBI’s request took steps that made it hard—impossible—later to cause the phone to back up again to the iCloud,”

Comey’s statements appear to be consistent with court documents all suggesting that both Apple and the FBI believed the device would begin backing up to the cloud once it was connected to a known WiFi network. This essentially established that interference with evidence ultimately led to the destruction of the trusted relationship between the device and its iCloud account, which prevented evidence from being available. In other words, the mistake of trying to break into the safe caused the safe to lock down in a way that made it more difficult to get evidence out of it.

Shoot First, Ask Siri Later

On February 26, 2016 by Jonathan Zdziarski

You know the old saying, “shoot first, ask questions later”. It refers to the notion that careless law enforcement officers can often be short sighted in solving the problem at hand. It’s impossible to ask questions to a dead person, and if you need answers, that really makes it hard for you if you’ve just shot them. They’ve just blown their only chance of questioning the suspect by failing to take their training and good judgment into account. This same scenario applies to digital evidence. Many law enforcement agencies do not know how to properly handle digital evidence, and end up making mistakes that cause them to effectively kill their one shot of getting the answers they need.

In the case involving Farook’s iPhone, two things went wrong that could have resulted in evidence being lifted off the device.

First, changing the iCloud password prevented the device from being able to push an iCloud backup. As Apple’s engineers were walking FBI through the process of getting the device to start sending data again, it became apparent that the password had been changed (suggesting they may have even seen the device try to authorize on iCloud). If the backup had succeeded, there would be very little, if anything, that could have been gotten off the phone that wouldn’t be in the iCloud backup.

Secondly, and equally damaging to the evidence, was that the device was apparently either shut down or allowed to drain after it was seized. Shutting the device down is a common – but outdated – practice in field operations. Modern device seizure requires not only that the device be kept powered up, but also that all of the protocols leading up to the search and seizure be tuned so that it’s done quickly enough to prevent the battery from draining before you even arrive on scene. Letting the device power down effectively shot the suspect dead by removing any chances of doing the following:

Apple’s Burden to Protect or Perpetually Create a “Weapon”

On February 25, 2016 by Jonathan Zdziarski

As the Apple/FBI dispute continues on, court documents reveal the argument that Apple has been providing forensic services to law enforcement for years without tools being hacked or leaked from Apple. Quite the contrary, information is leaked out of Foxconn all the time, and in fact some of the software and hardware tools used to hack iOS products over the past several years (IP-BOX, Pangu, and so on) have originated in China, where Apple’s manufacturing process takes place. Outside of China, jailbreak after jailbreak has taken advantage of vulnerabilities in iOS, some with the help of tools leaked out of Apple’s HQ in Cupertino. Devices have continually been compromised and even today, Apple’s security response team releases dozens of fixes for vulnerabilities that have been exploited outside of Apple. Setting all of this aside for a moment, however, let’s take a look at the more immediate dangers of such statements.

By affirming that Apple can and will protect such a backdoor, Comey’s statement is admitting that Apple will be faced with not only the burden of breathing this forensics backdoor into existence, but must also take perpetual steps to protect it once it’s been created. In other words, the courts are forcing Apple to create what would be considered a weapon under the latest proposed Wassenaar rules, and charging them with the burden of also preventing that weapon from getting out – either the code itself, or the weaknesses that Apple would have to continue allowing to be baked into their products to allow the weapon to work.

On Ribbons and Ribbon Cutters

On February 23, 2016 by Jonathan Zdziarski

With most non-technical people struggling to make sense of the battle between FBI and Apple, Bill Gates introduced an excellent analogy to explain cryptography to the average non-geek. Gates used the analogy of encryption as a “ribbon around a hard drive”. Good encryption is more like a chastity belt, but since Farook decided to use a weak passcode, I think it’s fair here to call it a ribbon. In any case, let’s go with Gates’ ribbon analogy.

Where Gates is wrong is that the courts are not ordering Apple to simply cut the ribbon. In fact, I think there would be more in the tech sector who would support Apple simply breaking the weak password that Farook chose to use if this had been the case. Apple’s encryption is virtually unbreakable when you use a strong alphanumeric passcode, and so by choosing to use a numeric pin, you get what you deserve.
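To put rough numbers on the gap between a numeric PIN and an alphanumeric passcode, here is a minimal sketch. It assumes every guess costs a fixed 80 milliseconds on the device; that figure and the function names are assumptions chosen for illustration, not values taken from the case.

```python
# Assumption: each passcode attempt costs a fixed amount of time on the device.
SECONDS_PER_GUESS = 0.08

def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passcodes for a given alphabet and length."""
    return alphabet_size ** length

def worst_case_seconds(alphabet_size: int, length: int,
                       seconds_per_guess: float = SECONDS_PER_GUESS) -> float:
    """Time needed to try every possible passcode once."""
    return keyspace(alphabet_size, length) * seconds_per_guess

if __name__ == "__main__":
    pin = worst_case_seconds(10, 4)    # four digits
    alnum = worst_case_seconds(36, 6)  # six lowercase letters or digits
    print(f"4-digit PIN: {pin / 60:.1f} minutes")
    print(f"6-char lowercase+digits: {alnum / (365 * 24 * 3600):.1f} years")
```

Under that assumed per-guess cost, a four-digit PIN is exhausted in under fifteen minutes, while six lowercase letters and digits take more than five years.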

Instead of cutting the ribbon, which would be a much simpler task, the courts are ordering Apple to invent a ribbon cutter – a forensic tool capable of cutting the ribbon for FBI, and is promising to use it on just this one phone. In reality, there’s already a line beginning to form behind Comey should he get his way. NY DA Cy Vance has stated that NYC has 175 iPhones waiting to be unlocked (which translates to roughly 1/10th of 1% of all crime in NYC for an entire year). Documents have also shown DOJ has over a dozen more such requests pending. If the promise of “just this one phone” were authentic, there would be no need to order Apple to make this ribbon cutter; they’d simply tell them to cut the ribbon.

Dumpster Diving in Forensic Science

On February 22, 2016 by Jonathan Zdziarski

Recent speculation has been made about a plan to unlock Farook’s iPhone simply so that they can walk through the evidence right on the device, rather than to forensically image the device, which would provide no information beyond what is already in an iCloud backup. Going through the applications by hand on an iPhone is along the dumpster level of forensic science, and let me explain why.

The device in question appears to have been powered down already, which has frozen the crypto as well as a number of processes on the device. While in this state, the data is inaccessible – but at least it’s in suspended animation. At the moment, the device is incapable of connecting to a WiFi network, running background tasks, or giving third party applications access to their own data for housekeeping. This all changes once the device is unlocked. Now when a pin code is brute forced, the task is actually running from a separate copy of the operating system booted into memory. This creates a sterile environment where the tasks on the device itself don’t start, but allows a platform to break into the device. This is how my own forensics tools used to work on the iPhone, as well as some commercial solutions that later followed my design. The device can be safely brute forced without putting data at risk. Using the phone is a different story.

On FBI’s Interference with iCloud Backups

On February 21, 2016 by Jonathan Zdziarski

In a letter emailed from FBI Press Relations in the Los Angeles Field Office, the FBI admitted to performing a reckless and forensically unsound password change that they acknowledge interfered with Apple’s attempts to re-connect Farook’s iCloud backup service. In attempting to defend their actions, the following statement was made in order to downplay the loss of potential forensic data:

“Through previous testing, we know that direct data extraction from an iOS device often provides more data than an iCloud backup contains. Even if the password had not been changed and Apple could have turned on the auto-backup and loaded it to the cloud, there might be information on the phone that would not be accessible without Apple’s assistance as required by the All Writs Act Order, since the iCloud backup does not contain everything on an iPhone.”

This statement implies only one of two possible outcomes:

10 Reasons Farook’s Work Phone Likely Won’t Have Any Evidence

On February 18, 2016 by Jonathan Zdziarski

Ten reasons to consider about Farook’s work phone:

Apple, FBI, and the Burden of Forensic Methodology

On February 18, 2016 by Jonathan Zdziarski

Recently, FBI got a court order that compels Apple to create a forensics tool; this tool would let FBI brute force the PIN on a suspect’s device. But let’s look at the difference between this and simply bringing a phone to Apple; maybe you’ll start to see why the difference is so significant, not to mention underhanded.

First, let me preface this with the fact that I am speaking from my own personal experience both in the courtroom and working in law enforcement forensics circles since around 2008. I’ve testified as an expert in three cases in California, and many others have pleaded out or had other outcomes not requiring my testimony. I’ve spent considerable time training law enforcement agencies around the world specifically in iOS forensics, met LEOs in the middle of the night to work on cases right off of airplanes, gone through the forensics validation process and clearance processes, and dealt with red tape and all the other terrible aspects of forensics that you don’t see on CSI. It was a lot of fun but was also an incredibly sobering experience, as I have not been trained to deal with the evidence (images, voicemail, messages, etc) that I’ve been exposed to like LEOs have; my faith has kept me grounded. I’ve developed an amazing amount of respect for what they do.

For years, the government could come to Apple with a warrant and a phone, and have the manufacturer provide a disk image of the device. This largely worked because Apple didn’t have to hack into their phones to do this. Up until iOS 8, the encryption Apple chose to use in their design was easily reversible when you had code execution on the phone (which Apple does). So all through iOS 7, Apple only needed to insert the key into the safe and provide FBI with a copy of the data.

Code is Law

On February 17, 2016 by Jonathan Zdziarski

For the first time in Apple’s history, they’ve been forced to think about the reality that an overreaching government can make Apple their own adversary. When we think about computer security, our threat models are almost always without, but rarely ever within. This ultimately reflects through our design, and Apple is no exception. Engineers working on encryption projects are at a particular disadvantage, as the use (or abuse) of their software is becoming gradually more at the mercy of legislation. The functionality of encryption based software boils down to its design: is its privacy enforced through legislation, or is it enforced through code?

My philosophy is that code is law. Code should be the angry curmudgeon that doesn’t even trust its creator, without the end user’s consent. Even at the top, there may be outside factors affecting how code is compromised, and at the end of the day you can’t trust yourself when someone’s got a gun to your head. When the courts can press the creator of code into becoming an adversary against it, there is only ultimately one design conclusion that can be drawn: once the device is provisioned, it should trust no-one; not even its creator, without direct authentication from the end user.

tl;dr technical explanation of #ApplevsFBI

On February 17, 2016 by Jonathan Zdziarski

- Apple was recently ordered by a magistrate court to assist the FBI in brute forcing the PIN of a device used by the San Bernardino terrorists.

- The court ordered Apple to develop custom software for the device that would disable a number of security features to make brute forcing possible.

- Part of the court order also instructed Apple to design a system by which pins could be remotely sent to the device, allowing for rapid brute forcing while still giving Apple plausible deniability that they hacked a customer device in a literal sense.

- All of this amounts to the courts compelling Apple to design, develop, and protect a backdoor into iOS devices.
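The points above can be illustrated with a toy model of why the security features matter to a brute-force attempt. This is only a sketch under stated assumptions: `ToyDevice`, its attempt limit of 10, and `brute_force` are hypothetical names and values invented for illustration, and nothing here reflects how iOS is actually implemented.

```python
from itertools import product

class ToyDevice:
    """Hypothetical device that wipes itself after too many wrong PINs."""

    def __init__(self, pin, max_attempts=10):
        self._pin = pin
        self.max_attempts = max_attempts  # None models the limit being disabled
        self.failed = 0
        self.wiped = False

    def try_pin(self, guess):
        if self.wiped:
            return False
        if guess == self._pin:
            return True
        self.failed += 1
        if self.max_attempts is not None and self.failed >= self.max_attempts:
            self.wiped = True  # data is gone; further guesses are pointless
        return False

def brute_force(device, digits=4):
    """Try every numeric PIN in order; return it, or None if the device wipes."""
    for combo in product("0123456789", repeat=digits):
        guess = "".join(combo)
        if device.try_pin(guess):
            return guess
        if device.wiped:
            return None
    return None

if __name__ == "__main__":
    print(brute_force(ToyDevice("4821")))                     # limit enforced
    print(brute_force(ToyDevice("4821", max_attempts=None)))  # limit disabled
```

With the limit enforced, the toy device wipes itself long before the search reaches the PIN; with the limit disabled, the same loop eventually succeeds. That contrast is the reason the order focuses on disabling features rather than on the encryption itself.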

The Politics Behind iPhone Encryption and the FBI

On September 25, 2014 by Jonathan Zdziarski

Apple’s new policy about law enforcement is ruffling some feathers with FBI, and has been a point of debate among the rest of us. It has become such because it’s been viewed as just that – a policy – rather than what it really is, which is a design change. With iOS 8, Apple has finally brought their operating system up to what most experts would consider “acceptable security”. My tone here suggests that I’m saying all prior versions of iOS had substandard security – that’s exactly what I’m saying. I’ve been hacking on the phone since they first came out in 2007. Since the iPhone first came out, Apple’s data security has had a dismal track record. Even as recent as iOS 7, Apple’s file system left almost all user data inadequately encrypted (or protected), and often riddled with holes – or even services that dished up your data to anyone who knew how to ask. Today, what you see happening with iOS 8 is a major improvement in security, by employing proper encryption to protect data at rest. Encryption, unlike people, knows no politics. It knows no policy. It doesn’t care if you’re law enforcement, or a criminal. Encryption, when implemented properly, is indiscriminate about who it’s protecting your data from. It just protects it. That is key to security.

Up until iOS 8, Apple’s encryption didn’t adequately protect users because it wasn’t designed properly (in my expert opinion). Apple relied, instead, on the operating system to protect user data, and that allowed law enforcement to force Apple to dump what amounted to almost all of the user data from any device – because it was technically feasible, and there was nobody to stop them from doing it. From iOS 7 and back, the user data stored on the iPhone was not encrypted with a key that was derived from the user’s passcode. Instead, it was protected with a key derived from the device’s hardware… which is as good as having no key at all. Once you booted up any device running iOS 7 or older, much of that user data could be immediately decrypted in memory, allowing Apple to dump it and provide a tidy disk image to the police. Incidentally, it also allowed a number of hackers (including criminals) to read it.
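The difference between a key derived only from hardware and one that also depends on the user’s passcode can be sketched as follows. This is an illustration of the general idea only, not Apple’s actual key hierarchy: the device identifier, the function names, and the use of SHA-256 and PBKDF2 with 10,000 iterations are all assumptions made for the example.

```python
import hashlib

# Hypothetical per-device secret; on a real device this would never leave hardware.
DEVICE_UID = bytes.fromhex("00112233445566778899aabbccddeeff")

def hardware_only_key(device_uid: bytes) -> bytes:
    """Key that depends only on the device: anyone running code on it can derive it."""
    return hashlib.sha256(b"file-key" + device_uid).digest()

def passcode_entangled_key(device_uid: bytes, passcode: str,
                           iterations: int = 10_000) -> bytes:
    """Key that also requires the user's passcode, stretched to slow down guessing."""
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), device_uid, iterations)

if __name__ == "__main__":
    # No passcode needed: whoever controls the device gets the same key every time.
    print(hardware_only_key(DEVICE_UID).hex())
    # A different passcode yields a different key, so the device alone is not enough.
    print(passcode_entangled_key(DEVICE_UID, "1234").hex())
    print(passcode_entangled_key(DEVICE_UID, "1235").hex())
```

In the first design, having code execution on the device is sufficient to recover the key. In the second, the party holding the device still has to guess the passcode, which is why the design change matters more than any policy.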

An Example of Forensic Science at its Worst: US v. Brig. Gen. Jeffrey Sinclair

On August 24, 2014 by Jonathan Zdziarski

In early 2014, I provided material support in what would end up turning around what was, in their own words, the US Army’s biggest case in a generation, and much to the dismay of the prosecution team that brought me in to assist them. In the process, it seems I also prevented what the evidence pointed to as an innocent man, facing 25 years in prison, from becoming a political scapegoat. Strangely, I was not officially contracted on the books, nor was I asked to sign any kind of NDA or exposed to any materials marked classified (nor did I have a clearance at that time), so it seems safe to talk about this, and I think it’s an important case.

While I would have thought other cases like US v. Manning would have been considered more important than this to the Army (and certainly to the public), this case – US v. Brig. Gen. Jeffrey Sinclair with the 18th Airborne Corps – could have seriously affected the Army directly, and in a more severe way. It was during this case that President Obama was doing his usual thing of making strongly worded comments with no real ideas about how to fix anything – this time against sexual abuse in the military. Simultaneously, however, the United States Congress was getting ramped up to vote on a military sexual harassment bill. At stake was a massive power grab from Congress that would have resulted in stripping the Army of its authority to prosecute sexual harassment cases and other felonies. The Army maintaining their court martial powers in this area seemed to be the driving cause that made this case vastly more important to them than any other in recent history. At the heart of prosecuting Sinclair was the need to prove that the Army was competent enough to run their own courts. With that came what appeared to be a very strong need to make an example out of someone. I didn’t have a dog in this fight at all, but when the US Army comes asking for your help, of course you want to do what you can to serve your country. I made it clear, however, that I would deliver unbiased findings whether they favored the prosecution or not. After finishing my final reports and looking at all of the evidence, followed by the internal US Army drama that went with it, it became clear that this whole thing had – up until this point – involved too much politics and not enough fair trial.

Why You Should Uninstall Firefox and do Some Soul Searching

On April 3, 2014 by Jonathan Zdziarski

Today, I uninstalled Firefox from my computer. There was no fanfare, or large protest, or media coverage of the event. In fact, I’m sure many have recently sworn off Firefox, but unlike the rest of those who did, my reasons had nothing to do with whether I support or don’t support gay marriage, proposition 8, or whatever. Nor did they have anything to do with my opinion on whether Brendan Eich was fit to be CEO, or whether I thought he was anti-gay. In fact, I would have uninstalled Firefox today regardless of what my position is on the gay marriage issue, or any other political issue for that matter. Instead, I uninstalled Firefox today for one simple reason: in the tendering of Eich’s resignation, Mozilla crossed over from a company that had previously taken a neutral, non-participatory approach to politics, to an organization that has demonstrated that it will now make vital business decisions based on the whim of popular opinion. By changing Mozilla’s direction to pander to the political and social pressure ignited by a small subset of activists, Mozilla has now joined the ranks of many large organizations in adopting what once was, and should be considered taboo: lack of corporate neutrality. It doesn’t matter what those positions are, or what the popular opinion is, Mozilla has violated its ethical responsibility to, as an organization, remain neutral to such topics. Unfortunately, this country is now owned by businesses that violate this same ethical responsibility.

Corporations have rapidly stepped up lobbying and funneling money into their favorite political vices over the past decade. This radicalization of corporate America climaxed in 2010, when what was left of the Tillman Act (a law passed in 1907 to restrict corporate campaign contributions), was essentially destroyed, virtually unrestricting the corporate world from holding politicians in their back pocket through financial contributions. Shortly before, and since then, America has seen a massive spike in the amount of public, overt political lobbying – not by people, not by voters, but by faceless organizations (without voting rights). What used to be a filthy act often associated with companies like tobacco manufacturers has now become a standard mechanism for manipulating politics. Starbucks has recently, and very rudely, informed its customers that they don’t want their business if they don’t support gay marriage, or if they are gun owners – in other words, if you don’t agree with the values of the CEO, you aren’t welcome in their public business. This very day, 36 large corporations, including some that have no offices in Oregon, are rallying in support of gay marriage in Oregon. The CEO of Whole Foods has come out publicly in protest of the Affordable Care Act. Regardless of your views on any of these, there’s a bigger problem here: it has now become accepted that corporate America can tell you what to believe.

On Expectation of Privacy

On April 25, 2013 by Jonathan Zdziarski

Many governments (including our own, here in the US) would have their citizens believe that privacy is a switch (that is, you either reasonably expect it, or you don’t). This has been demonstrated in many legal tests, and abused in many circumstances ranging from spying on electronic mail, to drones in our airspace monitoring the movements of private citizens. But privacy doesn’t work like a switch – at least it shouldn’t for a country that recognizes that privacy is an inherent right. In fact, privacy, like other components to security, works in layers. While the legal system might have us believe that privacy is switched off the moment we step outside, the intent of our Constitution’s Fourth Amendment (and our basic right, with or without it hard-coded into the Constitution) suggests otherwise; in fact, the Fourth Amendment was designed in part to protect the citizen in public. If our society can be convinced that privacy is a switch, however, then a government can make the case for flipping off that switch in any circumstance they want. Because no-one can ever practice perfect security, it’s easier for a government to simply draw a line at our front door. The right to privacy in public is one that is being very quickly stripped from our society by politicians and lawyers. Our current legal process for dealing with privacy misses one core component which adds dimension to privacy, and that is scope. Scope of privacy is present in many forms of logic that we naturally express as humans. Everything from computer programs to our natural technique for conveying third grade secrets (by cupping our hands over our mouth) demonstrates that we have a natural expectation of scope in privacy.
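As a small illustration of scope in a computer program, the sketch below uses a Python closure; the names `make_counter` and `increment` are hypothetical and chosen only for this example. The variable `count` is visible to the inner function that was deliberately given access to it, and to nothing else, rather than being simply “on” or “off” for the whole program.

```python
def make_counter():
    count = 0  # visible only inside make_counter and the function it returns

    def increment():
        nonlocal count  # the inner function is within scope, so it may use count
        count += 1
        return count

    return increment

if __name__ == "__main__":
    counter = make_counter()
    print(counter())
    print(counter())
    # Code out here has no name "count" to refer to; it is outside the scope.
```

The value exists for the whole life of the counter, yet only the party inside its scope can reach it, which is the layered expectation described above.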

On Mental Health: How Medical Privacy Laws Destroyed Dad

On December 18, 2012 by Jonathan Zdziarski

I don’t normally write about such personal topics as family illnesses, but it is my hope that those who have gone through a similarly dark corridor in their life – whether as a result of government control, or just plain ignorant doctors – would know that they are not alone in such frustrations, and to explain to the general oblivious public and incompetent lawmakers the consequences of their actions.

OnStar Begins Spying On Customers’ GPS Location For Profit?

On September 20, 2011 by Jonathan Zdziarski

I canceled the OnStar subscription on my new GMC vehicle today after receiving an email from the company about their new terms and conditions. While most people, I imagine, would hit the delete button when receiving something as exciting as new terms and conditions, being the nerd sort, I decided to have a personal drooling session and read it instead. I’m glad I did. OnStar’s latest T&C has some very unsettling updates to it, which include the ability to now collect your GPS location information and speed “for any purpose, at any time”. They also have apparently granted themselves the ability to sell this personal information, and other information to third parties, including law enforcement. To add insult to a slap in the face, the company insists they will continue collecting and selling this personal information even after you cancel your service, unless you specifically shut down the data connection to the vehicle after canceling. This could mean that if you buy a used car with OnStar, or even a new one that already has been activated by the dealer, your location and other information may get tracked by OnStar without your knowledge, even if you’ve never done business with OnStar.