Dispelling Confusion and Myths: iOS Proof-of-Concept

On July 25, 2014 by Jonathan Zdziarski

Here’s my iOS Backdoor Proof-of-Concept:

http://youtu.be/z5ymf0UsEuw

When I originally gave my talk, it was to a small room of hackers at a hacker conference with a strong privacy theme. With two hours of content to fit into 45 minutes, I not only had no time to demo a POC, but felt that demonstrating a POC of the personal data you could extract from a locked iOS device might be construed as attempting to embarrass Apple or to be sensationalist. After the talk, I did ask a number of people that I know attended if they felt I was making any accusations or outrageous statements, and they told me no, that I presented the information and left it to the audience to draw conclusions. They also mentioned that I was very careful with my wording, so as not to attempt to alarm people. The paper itself was published in a reputable forensics journal, and was peer-reviewed, edited, and accepted as an academic paper. Both my paper and presentation made some very important security and privacy concerns known, and the last thing I wanted to do was to fuel the fire for conspiracy theorists who would interpret my talk as an accusation that Apple is working with NSA. The fact is, I’ve never said Apple was conspiring secretly with any government agency – that’s what some journalists have concluded, and with no evidence mind you. Apple might be, sure, but then again they also might not be. What I do know is that there are a number of laws requiring compliance with customer data, and that Apple has a very clearly defined public law enforcement process for extracting much the same data off of passcode-locked iPhones as the mechanisms I’ve discussed do. In this context, what I deem backdoors (which Apple claims are for their own use), attack points, and so on become – yes suspicious – but more importantly abuse-prone, and can and have been used by government agencies to acquire data from devices that they otherwise wouldn’t be able to access with forensics software. 
As this deals with our private data, this should all be very open to public scrutiny – but some of these mechanisms had never been disclosed by Apple until after my talk.

Apple Confirms “Backdoors”; Downplays Their Severity

On July 23, 2014 by Jonathan Zdziarski

Apple responded to allegations of hidden services running on iOS devices with this knowledge base article. In it, they described three of the big services that I outlined in my talk. So again, Apple has, in a traditional sense, admitted to having backdoors on the device specifically for their own use.

A backdoor is simply an undisclosed mechanism that bypasses some of the front end security to make access easier for whoever it was designed for (OWASP has a great presentation on backdoors, where they are defined like this). It’s an engineering term, not a Hollywood term. In the case of file relay (the biggest undisclosed service I’ve been barking about), backup encryption is being bypassed, as well as basic file system and sandbox permissions, and a separate interface is there to simply copy a number of different classes of files off the device upon request; something that iTunes (and end users) never even touch. In other words, this is completely separate from the normal interfaces on the device that end users talk to through iTunes or even Xcode. Some of the data Apple can get is data the user can’t even get off the device, such as the user’s photo album that’s synced from a desktop, screenshots of the user’s activity, geolocation data, and other privileged personal information that the device protects even from its own users. This weakens privacy by completely bypassing the end user backup encryption that consumers rely on to protect their data, and also gives the customer a false sense of security, believing their personal data is going to be encrypted if it ever comes off the device.

Apple Responds

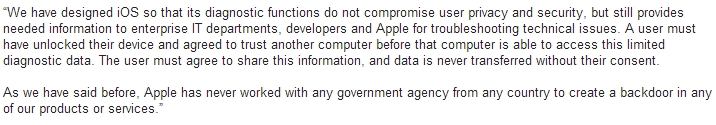

On July 21, 2014 by Jonathan Zdziarski

In a response from Apple PR to journalists about my HOPE/X talk, it looks like Apple might have inadvertently admitted that, in the most widely accepted sense of the word, they do indeed have backdoors in iOS, but claim that the purpose is for “diagnostics” and “enterprise”.

The problem with this is that these services dish out data (and bypass backup encryption) regardless of whether or not “Send Diagnostic Data to Apple” is turned on or off, and whether or not the device is managed by an enterprise policy of any kind. If these services were intended for such purposes, you’d think they’d only work if the device was managed/supervised or if the user had enabled diagnostic mode. Unfortunately, this isn’t the case. Every single device has these mechanisms enabled, there’s no way to turn them off, and users are never prompted for consent to send this kind of personal data off the device.

Slides from my HOPE/X Talk

On July 18, 2014 by Jonathan Zdziarski

Identifying Backdoors, Attack Points, and Surveillance Mechanisms in iOS Devices

In addition to the slides, you may be interested in the journal paper published in the International Journal of Digital Forensics and Incident Response. Please note: they charge a small fee for all copies of their journal papers; I don’t actually make anything off of

My Talk at HOPE X

On June 9, 2014 by Jonathan Zdziarski

I’ll be speaking at the HOPE (Hackers on Planet Earth) big X conference this year, July 18-20 in NYC. Below is a summary of my talk. It’s based on this published journal paper. Hope to see you there!

Identifying Back Doors, Attack Points, and Surveillance Mechanisms in iOS Devices

The iOS operating system has long

TrueCrypt.org May be Compromised

On May 28, 2014 by Jonathan Zdziarski

Today, a new version of TrueCrypt (7.2) was pushed to SourceForge, and the TrueCrypt.org website was replaced with an incredibly suspicious page recommending users cease all use of TrueCrypt and use tools such as BitLocker. The TrueCrypt maintainers have not officially (as of the time of this writing) commented yet on whether the site is compromised, or whether they are (less likely) scuttling the project for reasons unknown.

There have been a number of conspiracy theories ranging from a warrant canary (someone tipping off the TrueCrypt team that a secret warrant was issued for information about them) to a massive website compromise, and finally to a terribly sloppy and unprofessional true exit from TrueCrypt.

My take? I don’t know, but most agree it is very suspicious that the TrueCrypt team would lead anyone to use private, proprietary software like BitLocker, when there are plenty of FOSS implementations out there that work well. Usually when someone is lying under duress (or even trolling), one natural way to tip everyone else off to that fact is to state something completely unbelievable that other people would see is completely unbelievable. The TC team recommending BitLocker fits that bill, and I think leaves a hint to the public to disregard everything they’re saying about TC. The whole thing smells suspicious, and at the very least, should be approached with caution.

One thing is for certain: You should not download or trust anything from TrueCrypt until this is all sorted out. That doesn’t mean, however, that you should stop using TrueCrypt if you already are.

Here are a few steps on what you should do, however, to protect your content:

A Major Supreme Court Ruling on its Way

On May 3, 2014 by Jonathan Zdziarski

I recently gave an interview with Forbes discussing the technical implications of a case recently heard by the Supreme Court about warrantless mobile phone searches. The technical reasons for not allowing this to go on are many, including the most severe penalty of potentially destroying evidence that you would otherwise need to prosecute a case (should the suspect be found to have committed a crime). There is a far more important dimension to this SCOTUS case, however; the ruling to come could potentially change the face of our constitutional rights as it pertains to data.

Why You Should Uninstall Firefox and do Some Soul Searching

On April 3, 2014 by Jonathan Zdziarski

Today, I uninstalled Firefox from my computer. There was no fanfare, or large protest, or media coverage of the event. In fact, I’m sure many have sworn off Firefox lately, but unlike the rest of those who did, my reasons had nothing to do with whether I support or don’t support gay marriage, Proposition 8, or whatever. Nor did they have anything to do with my opinion on whether Brendan Eich was fit to be CEO, or whether I thought he was anti-gay. In fact, I would have uninstalled Firefox today regardless of what my position is on the gay marriage issue, or any other political issue for that matter. Instead, I uninstalled Firefox today for one simple reason: in the tendering of Eich’s resignation, Mozilla crossed over from a company that had previously taken a neutral, non-participatory approach to politics, to an organization that has demonstrated that it will now make vital business decisions based on the whim of popular opinion. By changing Mozilla’s direction to pander to the political and social pressure ignited by a small subset of activists, Mozilla has now joined the ranks of many large organizations in adopting what once was, and should be, considered taboo: lack of corporate neutrality. It doesn’t matter what those positions are, or what the popular opinion is; Mozilla has violated its ethical responsibility to, as an organization, remain neutral to such topics. Unfortunately, this country is now owned by businesses that violate this same ethical responsibility.

Corporations have rapidly stepped up lobbying and funneling money into their favorite political vices over the past decade. This radicalization of corporate America climaxed in 2010, when what was left of the Tillman Act (a law passed in 1907 to restrict corporate campaign contributions), was essentially destroyed, virtually unrestricting the corporate world from holding politicians in their back pocket through financial contributions. Shortly before, and since then, America has seen a massive spike in the amount of public, overt political lobbying – not by people, not by voters, but by faceless organizations (without voting rights). What used to be a filthy act often associated with companies like tobacco manufacturers has now become a standard mechanism for manipulating politics. Starbucks has recently, and very rudely, informed its customers that they don’t want their business if they don’t support gay marriage, or if they are gun owners – in other words, if you don’t agree with the values of the CEO, you aren’t welcome in their public business. This very day, 36 large corporations, including some that have no offices in Oregon, are rallying in support of gay marriage in Oregon. The CEO of Whole Foods has come out publicly in protest of the Affordable Care Act. Regardless of your views on any of these, there’s a bigger problem here: it has now become accepted that corporate America can tell you what to believe.

The Importance of Forensic Tools Validation

On March 28, 2014 by Jonathan Zdziarski

I recently finished consulting on a rather high profile case, and once again found myself spending almost as much time correcting reports from third party forensic tools vendors as I did analyzing actual evidence. It’s even sadder that I charged less for my services than these tools manufacturers charge for a single license of their buggy software. I don’t say high profile to sound important; I say it because these types of cases are generally of great importance themselves, and you absolutely need the evidence to be accurate. Many in the law enforcement community have learned to “trust the tools”, citing scientific method and all that. My experience throughout my entire career in forensics, however, has shown me quite the opposite. When it comes to forensic software, the judge should not automatically trust the forensic tools as part of the scientific process, and neither should the forensic examiners using them. Let me explain why…

In forensics, we often misplace our trust in tools that, unlike tried and true scientific methods, are usually closed source. While true scientific process relies on making our findings repeatable and verifiable, the methods to analyze data are sometimes patented, and almost always considered trade secrets. This is the complete opposite of the scientific method, where methods are fully explained and documented. In the software industry, repeatable is exactly what you don’t want your methods to be – especially by your competitors. The nature of secrecy in the software industry doesn’t rub well against the open scientific nature that you’d expect to find in forensic, or other scientific disciplines. As such, “software” is not scientific in nature, and should not be trusted using the same rules as science. Sure, we have some validation experts out there. NIST does a good job of validating logical data acquired from a number of devices and has produced some good and interesting results that have helped the industry. Even so, such tests are only a single data point on an ever evolving software manufacturing process riddled with regression bugs and programming errors that only show up in certain specific data sets.

Recovering Photos From Bad Storage Cards

On March 17, 2014 by Jonathan Zdziarski

A Guide for Photographers, Not Geeks

Most photographers have had at least one heart attack moment when they realize all of the photos they’ve taken on a shoot (or a vacation) are suddenly gone, and there’s nothing on the camera’s storage card. Perhaps you’ve accidentally formatted the wrong card, or the card just somehow got damaged. If you’re a professional photographer, there’s a good chance you’re also not a forensic scientist or a hard-core nerd (although it’s OK to be all three!). That minor detail doesn’t mean, however, that you can’t learn to carve data off of a bad storage card and save yourself a lot of money on data recovery. While there are many aspects to forensic science that are extremely complicated, data carving isn’t one of them, and I’ll even walk you through how to do it on your Mac in this article, with a little bit of open source software and a few commands. If you’re scared of your computer, don’t worry. This is all very easy even though it looks a bit intimidating at first. You can test your skills using any old storage card you might have on hand. It doesn’t have to be damaged, although you might be surprised just how much data you thought was deleted is still on it!

First, let’s talk about how your storage card works. When you plug your storage card into your computer, your computer looks for a list of files on the card; this is kind of like a rolodex of all the files your camera has stored. This “catalog” basically says, “OK, this file is this big, and it starts here”. You can think of it like the table of contents of a book. When you format a storage card, most of the time it’s just this table of contents that gets deleted; the actual bits and bytes from the photo you took aren’t erased (because that would take too long). The same can be true when the file system becomes damaged; in most cases, it’s just the file listing that gets blown up somehow, making it appear like there are no files on the card. In more extreme cases, physical damage can sometimes destroy the data on one part of the card, but the data on the rest of the card can still be recovered; your computer needs to be told to look past all the damaged data, instead of just giving you an error message.
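To make the "table of contents" idea concrete, here is a minimal sketch of what a carving tool does under the hood. It is an illustration in Python, not the open source software this guide uses. It ignores the file listing entirely and scans the raw bytes of the card for the markers that begin and end a JPEG. The function name `carve_jpegs` and the size cap are assumptions made up for this example, and the approach is deliberately naive: a real photo with an embedded thumbnail contains nested markers, so a proper carving tool does more work than this.

```python
from typing import Iterator

# Every JPEG starts with the bytes FF D8 FF and ends with FF D9.
JPEG_START = b"\xff\xd8\xff"
JPEG_END = b"\xff\xd9"


def carve_jpegs(raw: bytes, max_size: int = 50 * 1024 * 1024) -> Iterator[bytes]:
    """Yield candidate JPEG byte runs found anywhere in a raw card image.

    The file system's catalog is never consulted; only the photo data
    itself is inspected. `max_size` (a hypothetical cap) bounds how far
    we search for an end marker after each start marker.
    """
    pos = 0
    while True:
        start = raw.find(JPEG_START, pos)
        if start == -1:
            return  # no more start markers on the card
        end = raw.find(JPEG_END, start + len(JPEG_START), start + max_size)
        if end == -1:
            # No end marker in range: skip this start marker and keep looking.
            pos = start + 1
            continue
        yield raw[start:end + len(JPEG_END)]
        pos = end + len(JPEG_END)
```

In practice you would feed this the bytes of a raw copy of the card (for example, an image file made with a disk imaging tool) and write each carved run out to its own `.jpg` file. Because it never reads the catalog, it behaves the same whether the card was formatted, or the file listing was damaged.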

Journal Paper Published

On March 12, 2014 by Jonathan Zdziarski

The International Journal of Digital Forensics and Incident Response has formally accepted and published my paper titled Identifying Back Doors, Attack Points, and Surveillance Mechanisms in iOS Devices. This paper is a compendium of services and mechanisms used by many law enforcement agencies and in open source, of modern forensic techniques to create a forensic

Why Not To Shoot at f/22 Anymore

On January 30, 2014 by Jonathan Zdziarski

Every “professional” photography book I’ve read makes it gospel that you have to shoot landscapes at f/22, in order to ensure that the foreground and background are in focus. Special thanks to these guys for teaching millions of photographers to create blurry photos. Lens Diffraction, and an explanation as to why shooting at f/22 (and

How to Tolerate DxO by Hacking MakerNotes and EXIF Tags

On January 22, 2014 by Jonathan Zdziarski

DxO Optics Pro was a purchase I immediately regretted making, once I realized that it intentionally restricts you from selecting what lens optics you’d like to adjust your photo with. It would take all of five minutes of programming to let the user decide, but for whatever stupid reason, if you’re using a different lens than the one they support OR if you are looking to adjust a photo that you’ve already adjusted in a different program, DxO becomes relatively useless.

I’ve figured out a couple easy ways to hack the tags in a raw image file to “fake” a different kind of lens. This worked for me. I make no guarantees it will work for you. In my case, I have a Canon 8-15mm Fisheye, which isn’t supported by DxO. The fixed 15mm Fisheye is, however, and since I only ever shoot at 15mm, I’d like to use the fixed module to correct. As it turns out, the module does a decent job once you fake DxO into thinking you actually used that lens.

Forensics Tools: Stop Miscalculating iOS Usage Analytics!

On January 22, 2014 by Jonathan Zdziarski

There are some great forensics tools out there… and also some really crummy ones. I’ve found an incredible amount of wrong information in the often 500+ page reports some of these tools crank out, and oftentimes the accuracy of the data is critical to one of the cases I’m assisting with at the time. Tools validation is critical to the healthy development cycle of a forensics tool, and unfortunately many companies don’t do enough of it. If investigators aren’t doing their homework to validate the information (and subsequently provide feedback to the software manufacturer), the consequences could mean an innocent person goes to jail, or a guilty one goes free. No joke. This could have happened a number of times had I not caught certain details.

Today’s reporting fail is with regards to the application “usage” information stored in iOS in the ADDataStore.sqlitedb file. At least a couple forensics tools are misreporting this data so as to be up to 26 hours or more off.

Thoughts on iMessage Integrity

On October 19, 2013 by Jonathan Zdziarski

Recently, Quarkslab exposed design flaws[1] in Apple’s iMessage protocol demonstrating that Apple does, despite its vehement denial, have the technical capability to intercept private iMessage traffic if they so desired, or were coerced to under a court order. The iMessage protocol is touted to use end-to-end encryption; however, Quarkslab revealed in their research that the asymmetric keys generated to perform this encryption are exchanged through key directory servers centrally managed by Apple, which allow for substitute keys to be injected to allow eavesdropping to be performed. Similarly, the group revealed that certificate pinning, a very common and easy-to-implement certificate chain security mechanism, was not implemented in iMessage, potentially allowing malicious parties to perform MiTM attacks against iMessage in the same fashion. While the Quarkslab demonstration required physical access to the device in order to load a managed configuration, a MiTM is also theoretically possible by any party capable of either forging, or ordering the forgery of, a certificate through one of the many certificate authorities built into the iOS TrustStore, either through a compromised certificate authority, or by court order. A number of such abuses have recently plagued the industry, and made national news[2, 3, 4].
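For readers unfamiliar with certificate pinning, the following is a minimal sketch of the general idea in Python. It says nothing about how iMessage or any Apple API works; the function names and the choice to pin a SHA-256 hash of the whole certificate (rather than, say, only its public key) are assumptions made for illustration. The client ships with a short list of fingerprints it expects, and refuses any server certificate whose fingerprint isn't on that list, even if a certificate authority in the system trust store vouches for it.

```python
import hashlib
import hmac
from typing import Iterable


def fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint (hex) of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def is_pinned(der_cert: bytes, pinned: Iterable[str]) -> bool:
    """Accept the certificate only if its fingerprint matches a pinned value.

    In a real client, `der_cert` would be the certificate the server
    presented during the TLS handshake (in Python, for example, the bytes
    from ssl.SSLSocket.getpeercert(binary_form=True)).
    """
    seen = fingerprint(der_cert)
    # compare_digest avoids leaking match position through timing.
    return any(hmac.compare_digest(seen, pin) for pin in pinned)
```

With a check like this in place, a certificate forged through a compromised or coerced certificate authority would hash to a different fingerprint and be rejected, which is why the absence of pinning matters to the MiTM scenario described above.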

Fingerprint Reader / PIN Bypass for Enterprises Built Into iOS 7

On September 18, 2013 by Jonathan Zdziarski

With iOS 7 and the new 5s come a few new security mechanisms, including a snazzy fingerprint reader and a built-in “trust” mechanism to help prevent juice jacking. Most people aren’t aware, however, that with so much new consumer security also come new bypasses in order to give enterprises access to corporate devices. These are in your phone’s firmware, whether it’s company owned or not, and their security mechanisms are likely also within the reach of others, such as government agencies or malicious hackers. One particular bypass appears to bypass both the passcode lock screen as well as the fingerprint locking mechanism, to grant enterprises access to their devices while locked. But at what cost to the overall security of consumer devices?

Injecting Reveal With MobileSubstrate

On June 3, 2013 by Jonathan Zdziarski

Reveal is a cool prototyping tool allowing you to perform runtime inspection of an iOS application. At the moment, its functionality revolves primarily around user interface design, allowing you to manage user interface objects and their behavior. It is my hope that in the future, Reveal will expand to be a full featured debugging tool, allowing pen-testers to inspect and modify instance variables in memory, instantiate new objects, invoke methods, and generally hack on the runtime of an iOS application. For now, it’s still a pretty cool user interface design aid. Reveal is designed to be linked with your project, meaning you have to have the source code of the application you want to inspect. This is a quick little instructional on how to link the Reveal framework with any existing application on your iOS device, so that you can inspect it without source.

How Juice Jacking Works, and Why It’s a Threat

On June 3, 2013 by Jonathan Zdziarski

How ironic that only a week or two after writing an article about pair locking, we would see this talk coming out of Black Hat 2013, demonstrating how juice jacking can be used to install malicious software. The talk is getting a lot of buzz with the media, but many security guys like myself are scratching our heads wondering why this is being considered “new” news. Granted, I can only make statements based on the abstract of the talk, but all signs seem to point to this as a regurgitation of the same type of juice jacking talks we saw at DefCon two years ago. Nevertheless, juice jacking is not only technically possible, but has been performed in the wild for a few years now. I have my own juice jacking rig, which I use for security research, and I have also retrofitted my iPad Mini with a custom forensics toolkit, capable of performing a number of similar attacks against iOS devices. Juice jacking may not be anything new, but it is definitely a serious consideration for potential high profile targets, as well as for those serious about data privacy.

Free Download: iOS Forensic Investigative Methods

On May 6, 2013 by Jonathan Zdziarski

Given the vast amount of loose knowledge now out there in the community, and the increasing number of commercial tools available to conduct both law enforcement and private sector acquisition of an iOS device, I’ve decided to make my law enforcement guide, “iOS Forensic Investigative Methods” freely available to all. The manual contains a lot

iOS Counter Forensics: Encrypting SMS and Other Crypto-Hardening

On May 2, 2013 by Jonathan Zdziarski

As I explained in a recent blog post, your iOS device isn’t as encrypted as you think. In fact, nearly everything except for your email database and keychain can be (and often is) recovered by Apple under subpoena (your device is either sent to or flown to Cupertino, and a copy of its hard drive contents is provided to law enforcement). Depending on your device model, a number of existing forensic imaging tools can also be used to scrape data off of your device, some of which are pirated in the wild. Lastly, a number of jailbreak and hacking tools, and private exploits can get access to your device even if it is protected with a passcode or a PIN. The only thing protecting your data on the device is the tiny sliver of encryption that is applied to your email database and keychain. This encryption (unlike the encryption used on the rest of the file system) makes use of your PIN or passphrase to encrypt the data in such a way that it is not accessible until you first enter it upon a reboot. Because nearly every working forensics or hacking tool for iOS 6 requires a reboot in order to hijack the phone, your email database file and keychain are “reasonably” secure using this better form of encryption.

While I’ve made remarks in the past that Apple should incorporate a complex boot passphrase into their iOS full disk encryption, like they do with File Vault, it’s fallen on deaf ears, and we will just have to wait for Apple to add real security into their products. It’s also beyond me why Apple decided that your email was the only information a consumer should find “valuable” enough to encrypt. Well, since Apple doesn’t have your security in mind, I do… and I’ve put together something you can do to protect the remaining files on your device. This technique will let you turn on the same type of encryption used on your email index for other files on the device. The bad news is that you have to jailbreak your phone to do it, which reduces the overall security of the device. The good news is that the trade-off might be worth it. When you jailbreak, not only can unsigned code run on the device, but App Store applications running inside the sandbox will have access to much more personal data than they previously did, due to certain sandbox patches that are required in order to make the jailbreak work. This makes me feel uneasy, given the amount of spyware that’s already been found in the App Store… so you’ll need to be careful what you install if you’re going to jailbreak. The upside is that, by protecting other files on your device with Data-Protection encryption, forensic recovery will be highly unlikely without knowledge of (or brute forcing) your passphrase. Files protected this way are married to your passphrase, and so even with physical possession of your device, it’s unlikely they’d be recoverable.