How App Store Apps are Hacked on Non-Jailbroken Phones

On October 10, 2014 by Jonathan Zdziarski

This brief post will show you how hackers are able to download an App Store application, patch the binary, and upload it to a non-jailbroken device using its original App ID, without the device being aware that anything is amiss. This can be done with a $99 developer certificate from Apple and [optionally] an $89 disassembler. Also, with a $299 enterprise enrollment, a modified application can be loaded onto any iOS device, without first registering its UDID (great for black bag jobs and the intelligence community).

Why not to rely on self-expiring messaging apps

Now, it’s been known for quite some time in the iPhone development community that you can sign application binaries using your own dev certificate. Nobody’s taken the time to write up exactly how people are doing this, so I thought I would explain it. This isn’t considered a security vulnerability, although it could certainly be used to load a malicious copycat application onto someone’s iPhone (with physical access). It is more a byproduct of developer signing rights on a device, after it’s been enabled with a custom developer profile. What this should be is a lesson to developers (such as Snapchat, and others who rely on client-side logic) that the client application cannot be trusted for critical program logic. What does this mean for non-technical readers? In plain English, it means that Snapchat, as well as any other self-expiring messaging app in the App Store, can be hacked (by the recipient) to not expire the photos and messages you send them. This should be a no-brainer, but it seems there is a lot of confusion about this, hence the technical explanation.

As a developer, putting your access control on the client side is taboo. Most developers understand that applications can be “hacked” on jailbroken devices to manipulate the program, but very few realize it can be done on non-jailbroken devices too. There are numerous jailbreak tweaks for unlimited skips in Pandora, to prevent Snapchat messages from expiring, and even to add favorites in your mentions on TweetBot. The ability to hack applications is why (the good) applications do it all server-side. Certain types of apps, however, are designed in such a way that they depend on client logic to enforce access controls. Take Snapchat, for example, whose expiring messages require that the client make photos inaccessible after a certain period of time. These types of applications put the end-user at risk in the sense that they are more likely to send compromising content to a party that they don’t necessarily trust – thinking, at least, that the message has to expire.

Apple’s walled garden approach has given both end-users and developers the [false] sense of security that their applications aren’t susceptible to being “hacked” as long as the device isn’t jailbroken. As a result, many developers have incorporated counter-jailbreaking techniques into their apps (which also can be hacked), but they assume that if the device is not jailbroken, it can be trusted. Other developers don’t even go that far, and just assume that their application will always behave the way they’ve designed it – this is often a byproduct of having very little reverse engineering experience. Here’s a brief explanation of how hackers are using Apple’s developer program to manipulate applications on trusted platforms. Again, this is nothing new, but I thought it would be a good idea to document it.

Step 1: Decrypting the Application

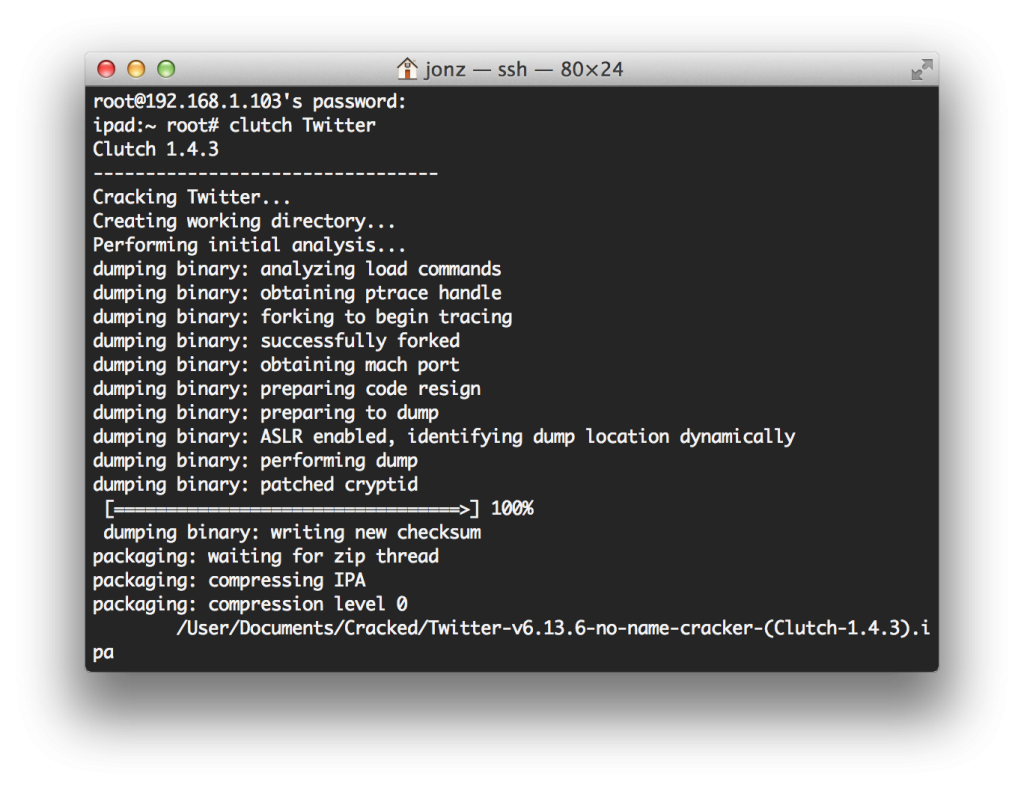

Before an application can be modified, it must first be decrypted; this is because Apple uses a form of DRM similar to FairPlay on applications. The decryption can be done by hand (I’ve outlined the methods in my book, Hacking and Securing iOS Applications), or made simple with a number of free tools. While a hacked app can be loaded onto a non-jailbroken device, you’ll need to first use a jailbroken device to decrypt the app – this can be any device, and doesn’t need to have any personal data on it. The most popular tool for the job, used largely in the software piracy scene (although it has many legitimate research uses), is Clutch: High Speed iOS Decryption System.

With one command, Clutch can decrypt and repackage the application as an .ipa (zip) file, which can then be copied over to a desktop machine for patching. To decrypt an application by hand, it is loaded into memory and a debugger is attached to it before it runs. The operating system has to decrypt the code before it can run, and so by setting a breakpoint, then dumping the memory at the program’s start address, you can get a complete decrypted dump of the executable code. The encrypted part of the file is then overwritten in-place with the decrypted copy, and the cryptid field in the binary’s encryption load command is set to 0. From here, it’s smooth sailing.
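To make the cryptid field concrete, here is a minimal sketch that reads it from a Mach-O image. It assumes a thin (single-architecture), little-endian binary and does not handle fat binaries; the function name `find_cryptid` is my own, not part of any tool mentioned here. The constants come from Apple’s `mach-o/loader.h`.

```python
import struct

MH_MAGIC = 0xFEEDFACE            # 32-bit Mach-O header magic
MH_MAGIC_64 = 0xFEEDFACF         # 64-bit Mach-O header magic
LC_ENCRYPTION_INFO = 0x21        # encryption_info_command
LC_ENCRYPTION_INFO_64 = 0x2C     # encryption_info_command_64


def find_cryptid(data: bytes):
    """Return (cryptoff, cryptsize, cryptid), or None if no encryption command exists.

    Assumes a thin, little-endian Mach-O image (hypothetical helper for illustration).
    """
    (magic,) = struct.unpack_from("<I", data, 0)
    if magic == MH_MAGIC:
        header_size = 28         # seven 32-bit fields
    elif magic == MH_MAGIC_64:
        header_size = 32         # same fields plus a reserved word
    else:
        raise ValueError("not a thin little-endian Mach-O image")

    (ncmds,) = struct.unpack_from("<I", data, 16)
    offset = header_size
    for _ in range(ncmds):
        cmd, cmdsize = struct.unpack_from("<II", data, offset)
        if cmd in (LC_ENCRYPTION_INFO, LC_ENCRYPTION_INFO_64):
            # Layout: cmd, cmdsize, cryptoff, cryptsize, cryptid
            return struct.unpack_from("<III", data, offset + 8)
        if cmdsize < 8:
            raise ValueError("malformed load command")
        offset += cmdsize
    return None
```

A cryptid of 1 means the range described by cryptoff and cryptsize is still encrypted; a decrypted dump has it set to 0.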

Step 2: Patch the binary to alter behavior

After transferring the decrypted .ipa file back over to the desktop, it can be unzipped, and the application can be found in its Payload directory. Depending on the kind of functionality the attacker is looking for, the binary can then be patched to change its behavior. In the case of Snapchat (and other applications that claim self-expiring messages), the application’s timer can be fudged with to prevent any messages from ever expiring. This is the same with virtually every other self-expiring messaging program out there. Even the well respected ones must have code to expire the messages on the client side; it’s just a matter of finding and patching it.
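Since an .ipa is just a zip archive, locating the main executable inside the Payload directory can be sketched with the standard library. This is a rough illustration, not what any particular tool does; `locate_main_executable` is a name I made up, and it assumes the bundle’s Info.plist carries a `CFBundleExecutable` key, as app bundles normally do.

```python
import plistlib
import zipfile


def locate_main_executable(ipa_path):
    """Return the archive path of the app's main binary, or None if not found."""
    with zipfile.ZipFile(ipa_path) as ipa:
        for name in ipa.namelist():
            parts = name.split("/")
            # Only match the top-level bundle: Payload/<Name>.app/Info.plist
            if (len(parts) == 3 and parts[0] == "Payload"
                    and parts[1].endswith(".app") and parts[2] == "Info.plist"):
                # plistlib reads both XML and binary property lists
                info = plistlib.loads(ipa.read(name))
                return f"Payload/{parts[1]}/{info['CFBundleExecutable']}"
    return None
```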

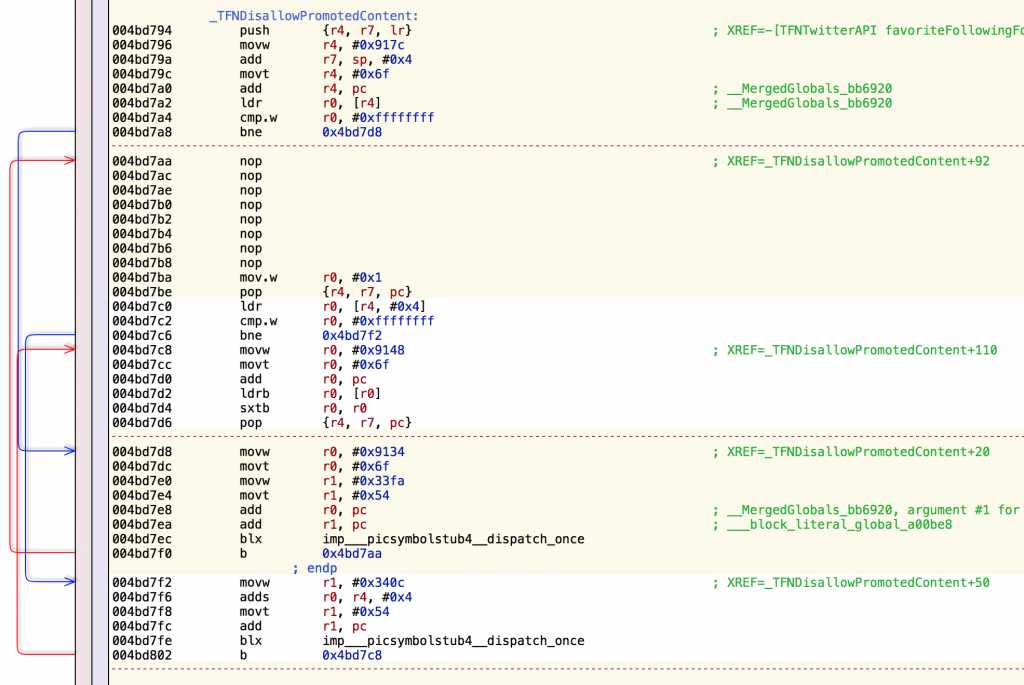

Patching can be done with an open source disassembler, although the process is made much easier with tools like Hopper, which is well worth the $89. Let’s take the Twitter application, for example. Hate those pesky ads? An attacker could easily modify the binary to simply NOP certain checks, and disable them. Here’s one example, which overrides checks in a function named TNFDisallowPromotedContent, that disables ads for Twitter employees (I guess they don’t eat that part of their own dog food). All those nop instructions between 0x004bd7aa and 0x004bd7b8 overwrite logic checks that would turn ads on for all non-employees of Twitter. Perhaps if Twitter employees were forced to look at their own ads, they might realize how annoying they are, and also how poor their analytics are.
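Mechanically, a NOP patch is nothing more than overwriting a byte range. The sketch below assumes 16-bit Thumb code, where NOP encodes as 0xBF00, and takes offsets into the buffer rather than virtual addresses like the ones above; translating an address to a file offset depends on the segment layout and is not shown. `nop_range` is a hypothetical helper, not a Hopper feature.

```python
THUMB_NOP = bytes.fromhex("00bf")  # 0xBF00 stored little-endian


def nop_range(code: bytes, start: int, end: int) -> bytes:
    """Return a copy of `code` with bytes [start, end) replaced by Thumb NOPs."""
    if not 0 <= start <= end <= len(code):
        raise ValueError("range falls outside the buffer")
    if start % 2 or (end - start) % 2:
        raise ValueError("Thumb NOPs are 2 bytes; range must be 2-byte aligned")
    patched = bytearray(code)
    patched[start:end] = THUMB_NOP * ((end - start) // 2)
    return bytes(patched)
```

The patched buffer has the same length as the original, which is why every offset elsewhere in the binary stays valid.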

Step 3: Sign the Binary With Developer Credentials and Repackage

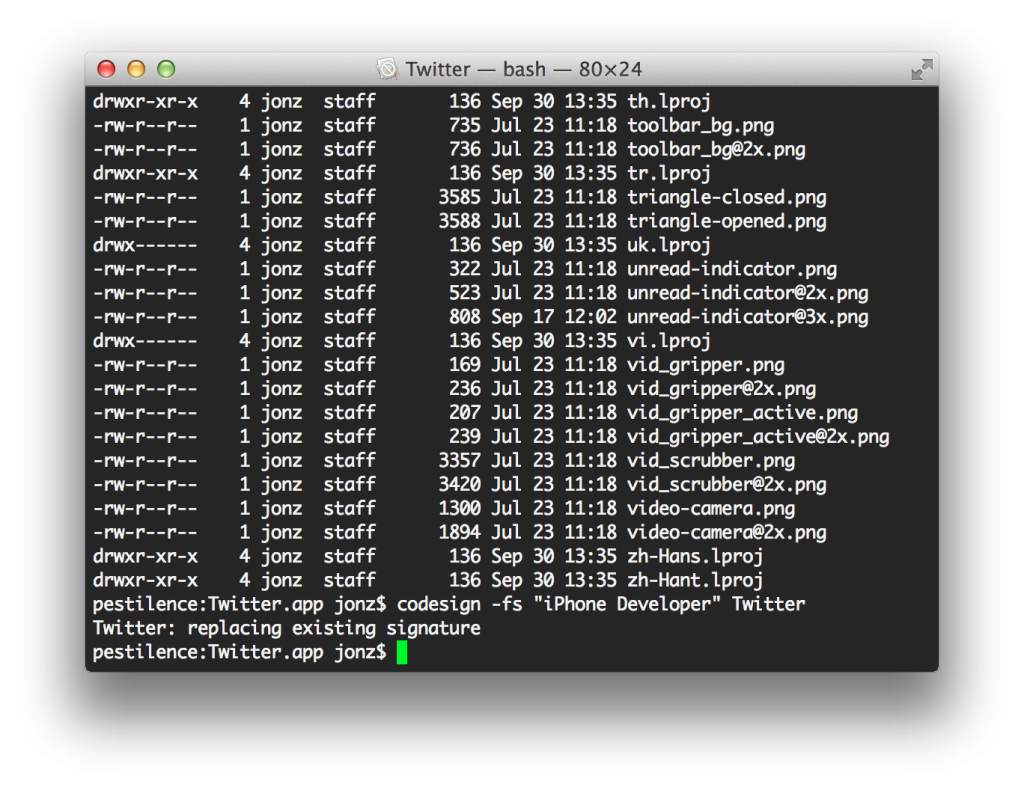

Once the binary has been modified, the original signature on it will become invalid. This is where having a $99 developer program enrollment comes into play for an attacker. The target iPhone doesn’t know the difference between the original developer’s certificate and yours, and so the binary can simply be re-signed using Apple’s codesign utility.

Once signed, the entire directory structure can be zipped back up and renamed .ipa.
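The repackaging step is plain zip handling. As a rough sketch (the function name and directory layout are assumptions on my part), the Payload tree can be written back into an archive with the standard library:

```python
import os
import zipfile


def repackage_ipa(root_dir, ipa_path):
    """Zip root_dir/Payload into ipa_path, keeping paths relative to root_dir."""
    payload = os.path.join(root_dir, "Payload")
    with zipfile.ZipFile(ipa_path, "w", zipfile.ZIP_DEFLATED) as ipa:
        for folder, _dirs, files in os.walk(payload):
            for filename in files:
                full_path = os.path.join(folder, filename)
                # Archive names always use forward slashes
                arcname = os.path.relpath(full_path, root_dir).replace(os.sep, "/")
                ipa.write(full_path, arcname)
```

The archive must keep Payload as its top-level directory, which is why paths are made relative to the parent of Payload rather than to Payload itself.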

Step 4: Copy Provisioning Profile and Application with Xcode

Using Xcode, the target device is loaded with the attacker’s provisioning profile which has registered the UDID of the device – unless they are part of Apple’s enterprise developer program. Enterprise developers (those who paid $299) get to load their applications on any iOS device without having to register the UDID first. The application can then simply be dragged onto the phone from Xcode’s organizer window, and it will pop up on the target phone. It will look and function just like the original application on the phone, and the user will be none the wiser. In fact, the App Store application will even check for updates to the software, believing the official app is installed (unless an attacker changes the identifier). My patched Twitter app works fine with push notifications and all other features, but suppresses all advertising.

Implications

The ability to override program logic can allow for a number of potential risks that developers need to be aware of. This type of attack could be used to prevent incoming photos or messages from ever expiring, or allow a screenshot to be used, etc. Going beyond Snapchat style apps, attacks against payment processing apps, banking apps, or even mobile device apps could all potentially be performed to attempt to access data that the end user shouldn’t otherwise have access to.

Consider this (real world) vulnerability that existed at one time: a certain payment processing application that kept a log of transactions on the client, and relied on client logic to limit the refund amount to not exceed the purchase amount. This vulnerability allowed an attacker to steal a merchant’s iOS device, patch the binary to let them charge themselves $1, then refund themselves $100,000, which drafted automatically from the merchant’s account. Such programming errors could potentially leave a small business bankrupt if gone unnoticed (and done more subtly).
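The fix for that class of bug is to enforce the limit where the attacker can’t patch it: on the server. Here is a minimal sketch of what such a check might look like; the ledger structure, `RefundError`, and `authorize_refund` are all hypothetical and stand in for whatever the real payment backend uses.

```python
from decimal import Decimal


class RefundError(Exception):
    """Raised when a refund request fails server-side validation."""


def authorize_refund(ledger, transaction_id, requested):
    """Approve a refund only if it fits within what was actually charged.

    `ledger` maps transaction IDs to {"charged": Decimal, "refunded": Decimal}
    and lives on the server, never on the client.
    Returns the amount still refundable after this request.
    """
    entry = ledger.get(transaction_id)
    if entry is None:
        raise RefundError("unknown transaction")
    requested = Decimal(requested)
    if requested <= 0:
        raise RefundError("refund must be positive")
    remaining = entry["charged"] - entry["refunded"]
    if requested > remaining:
        raise RefundError("refund exceeds the amount charged")
    entry["refunded"] += requested
    return remaining - requested
```

With this in place, a patched client can ask for a $100,000 refund on a $1 charge all day long; the server simply refuses.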

Other, more far-fetched but plausible attacks might include adding subroutines into code that would copy a user’s credentials or other personal data and send it to a remote server as the user used their application. Such an attack would require physical access to the device, as well as the PIN, but is possible. The user would think they’re using the same app they always use, but this technique would be used to introduce a trojan into it. For example, consider a CEO or diplomat targeted by some government; were their mobile device seized at an airport for a certain period of time, a patched copy of some secure messaging app could be loaded onto the device, replacing the existing one. Of course, none of this is easy to pull off, and requires physical access… but you get the idea.

Solution

The current way that applications are designed to install and run in iOS (including 8) allows modified binaries to be signed by any developer. It’s a difficult problem to address, and Apple could potentially do more to mitigate the risk, but this would require a redesign and possibly would not be a complete solution. Some type of trusted computing involving the secure enclave is likely going to be necessary, in addition to good design.

The first step in mitigating risk would be for Apple to implement codesign pinning, where certain app identifiers would be added to developer certs, and enforced by the OS. This, for example, would prevent anyone from using the bundle identifier com.facebook.* except for Facebook, who would have the bundle signed into their certs. This prevents identifier reuse but a bigger problem remains: how can applications (and servers they talk to) ensure that they’re talking to legitimate copies of applications remotely? For example, ensuring two Snapchat apps talking are really legit?

One idea, sent to me from Matthew Green, is for the OS to be able to inform the application somehow that it’s running under a distribution signature (that checks out with Apple’s App Store), in the form of a signed token generated in the secure element. This token can then be sent to peers for validation. For example: Alice wants to send Bob a picture. Alice initiates a request to authenticate Bob’s application. Bob’s application talks to iOS via a framework, which examines the application’s signature and verifies it’s a valid App Store distribution signature. It then signs a token and provides that back to the application, which gets sent to Alice’s device. Alice’s device must have a mechanism to authenticate that token in her own device’s secure element, then can tell the application to go forward with the transfer. If the authentication fails, Alice’s phone refuses to send the photo.
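To illustrate the message flow only, here is a toy simulation of that exchange. Nothing like this API exists in iOS; `SimulatedSecureElement` is invented for this sketch. Two loud assumptions: I use an HMAC over a shared key as a stand-in for whatever signature scheme a real secure element would use (a real design would almost certainly not share a symmetric key between devices), and I add a random challenge from Alice so that a token can’t be replayed, which the idea above doesn’t spell out.

```python
import hashlib
import hmac
import os


class SimulatedSecureElement:
    """Toy stand-in for the OS plus secure element (hypothetical, not an iOS API)."""

    def __init__(self, key: bytes):
        self._key = key  # assumption: stands in for hardware-held key material

    def _mac(self, bundle_id: str, challenge: bytes) -> bytes:
        return hmac.new(self._key, challenge + bundle_id.encode(), hashlib.sha256).digest()

    def issue_token(self, bundle_id, has_app_store_signature, challenge):
        """Bob's side: only sign if the OS verified a distribution signature."""
        if not has_app_store_signature:
            return None
        return self._mac(bundle_id, challenge)

    def verify_token(self, bundle_id, challenge, token):
        """Alice's side: authenticate the token before allowing the transfer."""
        if token is None:
            return False
        return hmac.compare_digest(self._mac(bundle_id, challenge), token)


# Usage: Alice challenges Bob's app before sending a photo.
key = os.urandom(32)
alice, bob = SimulatedSecureElement(key), SimulatedSecureElement(key)
challenge = os.urandom(16)
token = bob.issue_token("com.example.chat", True, challenge)
send_photo = alice.verify_token("com.example.chat", challenge, token)
```

The important property is that the signature check happens outside the application’s own (patchable) code; the re-signed app never gets a token to hand back.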

Another idea I had, which I think is similar to Matthew’s, is to supply developers with some extensions to the existing common crypto framework, where developers can marry a session key to a special key inside the secure enclave, through some form of key derivation function. This derived key will only be valid if the calling application can be verified by the OS as having a valid distribution signature. This way, the server can depend on encryption succeeding only if it has gone through the secure element, and subsequently from a trusted (verified) application. Somehow the server side will need to coordinate this, possibly through seeding via the Apple-issued distribution certificates.

These ideas may mitigate binary patches, but you’d still have to contend with runtime abuse on jailbroken phones. For this, Apple needs to perform proper jailbreak detection from within the secure element.

Conclusion

This issue isn’t going to go away any time soon, and desktop machines and other operating systems are even more susceptible to running modified binaries (this is how malware / trojans often work). Developers simply need to be aware that these technical capabilities exist, and stop trusting Apple’s “walled garden” and “sandbox” to protect all of their application logic. Execution space on client machines has never been considered secure, and never should be. Unfortunately, it seems that developers are starting to slip in their secure programming practices.

The moral of the story for developers is simply this: don’t trust end users, and that includes your code running on their devices.

The moral of the story for end users is: don’t trust developers, and refrain from sending content to people whom you don’t trust.

Like any third-party application in the App Store, these apps can be hacked. Whether it’s self-expiring photos or other data, anything you send is at the mercy of the recipient.

You might also want to read What You Need to Know About WireLurker, which explains how malware has used this technique in conjunction with a hijacked pair record to install decoy apps on iPhones. The real vulnerability in that case was the Trojan in pirated Chinese software; however, this technique combined with the Trojan and a little social engineering made for an interesting attack.
