Counter-Forensics: Pair-Lock Your Device with Apple’s Configurator

On September 27, 2014 by Jonathan Zdziarski
Last updated for iOS 8 on September 28, 2014

As it turns out, the same mechanism that provided iOS 7 with a potential back door can also be used to help secure your iOS 7 or 8 device should it ever fall into the wrong hands. This article is a brief how-to on using Apple’s Configurator utility to lock your device down so that no other devices can pair with it, even if you leave your device unlocked, or are compelled into unlocking it yourself with a passcode or a fingerprint. By pair-locking your device, you’re effectively disabling every logical forensics tool on the market by preventing it from talking to your iOS device, at least without first being able to undo this lock with pairing records from your desktop machine. This is a great technique for protecting your device from nosy coworkers, or from cops in some states who have started grabbing your call history at traffic stops.

With iOS 8’s new encryption changes, Apple will no longer service law enforcement warrants, meaning these forensics techniques are one of just a few reliable ways to dump forensic data from your device (which often contains deleted records and much more than you see on the screen). Whatever the reason, pair locking will likely leave the person dumbfounded as to why their program doesn’t work, and you can easily just play dumb while trying not to snicker. This is an important step if you are a journalist, diplomat, security researcher, or other type of individual who may be targeted by a hostile foreign government. It also helps protect you legally, so that you don’t have to be put in contempt of court for refusing to turn over your PIN. The best thing about this technique is that, unlike my previous technique using pairlock, this one doesn’t require jailbreaking your phone. You can do it right now with that shiny new device.

How to Help Secure Your iPhone From Government Intrusions

On September 27, 2014 by Jonathan Zdziarski

There’s been a lot of confusion about Apple’s recent statements in protecting iOS 8 data, supposedly stifling law enforcement’s ability to do their job. FBI boss James Comey has publicly criticized Apple, and essentially blamed them for the next hundred children who get kidnapped. While Apple’s new security improvements have made it a lot harder to get to certain types of data, it’s important to note that there are still a number of techniques that can be employed against iOS 8, with varying levels of success. Most of these are techniques that law enforcement is already using. Some are part of commercial forensics tools such as Oxygen and Cellebrite. The FBI is undoubtedly aware of them. I’ll outline some of the most common ones here.

I’ve included some tips for those of us who are concerned about data security. Security researchers, journalists, law abiding activists, diplomats, and many other types of high profile individuals should all be practicing good data security, especially when abroad. Foreign governments are just as capable of performing the same forensics techniques that our own government is capable of, and there is an overwhelming amount of information suggesting that all of these classes of individuals have been targeted by foreign governments.

The Politics Behind iPhone Encryption and the FBI

On September 25, 2014 by Jonathan Zdziarski

Apple’s new policy about law enforcement is ruffling some feathers with the FBI, and has been a point of debate among the rest of us. It has become such because it’s been viewed as just that – a policy – rather than what it really is, which is a design change. With iOS 8, Apple has finally brought their operating system up to what most experts would consider “acceptable security”. My tone here suggests that I’m saying all prior versions of iOS had substandard security – that’s exactly what I’m saying. I’ve been hacking on the phone since it first came out in 2007, and since then Apple’s data security has had a dismal track record. Even as recently as iOS 7, Apple’s file system left almost all user data inadequately encrypted (or protected), and often riddled with holes – or even services that dished up your data to anyone who knew how to ask. Today, what you see happening with iOS 8 is a major improvement in security, by employing proper encryption to protect data at rest. Encryption, unlike people, knows no politics. It knows no policy. It doesn’t care if you’re law enforcement, or a criminal. Encryption, when implemented properly, is indiscriminate about who it’s protecting your data from. It just protects it. That is key to security.

Up until iOS 8, Apple’s encryption didn’t adequately protect users because it wasn’t designed properly (in my expert opinion). Apple relied, instead, on the operating system to protect user data, and that allowed law enforcement to force Apple to dump what amounted to almost all of the user data from any device – because it was technically feasible, and there was nobody to stop them from doing it. From iOS 7 and back, the user data stored on the iPhone was not encrypted with a key that was derived from the user’s passcode. Instead, it was protected with a key derived from the device’s hardware… which is as good as having no key at all. Once you booted up any device running iOS 7 or older, much of that user data could be immediately decrypted in memory, allowing Apple to dump it and provide a tidy disk image to the police. Incidentally, it also allowed a number of hackers (including criminals) to read it.
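The difference between the two designs can be sketched in a few lines of Python. This is only an illustration of the idea, not Apple’s actual key hierarchy: the names `DEVICE_UID`, `hardware_only_key`, and `passcode_entangled_key`, the choice of PBKDF2, and the iteration count are all assumptions made for the example.

```python
import hashlib

# Hypothetical stand-in for a per-device hardware secret (not a real value).
DEVICE_UID = bytes.fromhex("00112233445566778899aabbccddeeff")


def hardware_only_key() -> bytes:
    """Key that depends only on the device secret.

    Anything running on the booted device can recompute it without
    knowing the user's passcode.
    """
    return hashlib.sha256(b"file-key" + DEVICE_UID).digest()


def passcode_entangled_key(passcode: str, iterations: int = 10_000) -> bytes:
    """Key that depends on both the device secret and the passcode.

    Without the passcode the key cannot be recomputed, even on the
    device itself. PBKDF2 and the iteration count are illustrative.
    """
    return hashlib.pbkdf2_hmac(
        "sha256", passcode.encode("utf-8"), DEVICE_UID, iterations
    )


if __name__ == "__main__":
    # The hardware-only key is identical no matter who is holding the phone.
    print(hardware_only_key().hex())
    # The entangled key changes as soon as the passcode changes.
    print(passcode_entangled_key("1234").hex())
    print(passcode_entangled_key("1235").hex())
```

In the first function, possession of the device is enough; in the second, the passcode becomes part of the key material, which is the property the paragraph above describes as missing before iOS 8.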

iOS 8 Protection Mode Bug: Some User Files At Risk of Exposure

On September 24, 2014 by Jonathan Zdziarski

Apple’s recent security announcement suggested that they no longer have the ability to dump your content from iOS 8 devices:

“On devices running iOS 8, your personal data such as photos, messages (including attachments), email, contacts, call history, iTunes content, notes, and reminders is placed under the protection of your passcode. Unlike our competitors, Apple cannot bypass your passcode and therefore cannot access this data. So it’s not technically feasible for us to respond to government warrants for the extraction of this data from devices in their possession running iOS 8.”

It looks like there are some glitches in this new encryption scheme, however, and some of the files being stored on your iOS 8 device are not getting encrypted in this way. If you copy files over to your device using iTunes’ “File Sharing” feature or sync videos that appear in the “Home Videos” section of iOS, these files are not getting placed under the protection of your passcode. Theoretically, Apple could dump these in Cupertino, if given your locked iPhone.

Your iOS 8 Data is Not Beyond Law Enforcement’s Reach… Yet.

On September 17, 2014 by Jonathan Zdziarski

In a recent announcement, Apple stated that they no longer unlock iOS (8) devices for law enforcement.

“On devices running iOS 8, your personal data such as photos, messages (including attachments), email, contacts, call history, iTunes content, notes, and reminders is placed under the protection of your passcode. Unlike our competitors, Apple cannot bypass your passcode and therefore cannot access this data. So it’s not technically feasible for us to respond to government warrants for the extraction of this data from devices in their possession running iOS 8.”

This is a significantly pro-privacy (and courageous) posture Apple is taking with their devices, and while about seven years late, is more than welcome. In fact, I am very impressed with Apple’s latest efforts to beef up security all around, including iOS 8 and iCloud’s new 2FA. I believe Tim Cook to be genuine in his commitment to user privacy; perhaps I’m one of the few who can see just how gutsy this move with iOS 8 is.

It’s important to take a minute, however, to note that this does not mean that the police can’t get to your data. What Apple has done here is create for themselves plausible deniability in what they will do for law enforcement. If we take this statement at face value, what has likely happened in iOS 8 is that photos, messages, and other sensitive data, which was previously only encrypted with hardware-based keys, is now being encrypted with keys derived from a PIN or passcode. No doubt this does improve security for everyone, by marrying encryption to the PIN (something they ought to have been doing all along). While it’s technically possible to brute force a PIN code, that doesn’t mean it’s technically feasible, and thus lets Apple off the hook in terms of legal obligation. Add a complex passcode into the mix, and it gets even uglier, having to choose any of a number of dictionary style attacks to get into your encrypted data. By redesigning the file system in this fashion (if this is the case), Apple has afforded themselves the ability to say, “the phone’s data is encrypted with a PIN or passphrase, and so we’re not legally required to hack it for you guys, so go pound sand”. I am quite impressed, Mr. Cook! That took courage… but it does not mean that your data is beyond law enforcement’s reach.
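The gap between “possible” and “feasible” is easy to see with some rough arithmetic. The sketch below is a back-of-the-envelope estimate only: the per-guess cost of 0.08 seconds is an assumption for illustration (the real figure depends on the hardware and on how the key derivation is tuned), and the function name `worst_case_seconds` is made up for this example. It also ignores escalating lockout delays and any wipe-after-failed-attempts setting.

```python
def worst_case_seconds(alphabet_size: int, length: int,
                       seconds_per_guess: float) -> float:
    """Time to try every passcode of the given length, one guess at a time."""
    return (alphabet_size ** length) * seconds_per_guess


# Assumed cost of a single on-device guess; purely illustrative.
SECONDS_PER_GUESS = 0.08

# 4-digit numeric PIN: 10^4 candidates.
pin4 = worst_case_seconds(10, 4, SECONDS_PER_GUESS)

# 6-character passcode drawn from lowercase letters and digits: 36^6 candidates.
alnum6 = worst_case_seconds(36, 6, SECONDS_PER_GUESS)

if __name__ == "__main__":
    print(f"4-digit PIN:       {pin4 / 60:.1f} minutes")
    print(f"6-char alnum code: {alnum6 / (86400 * 365):.1f} years")
```

Under these assumptions a simple PIN falls in minutes while a modest alphanumeric passcode takes years, which is why a complex passcode changes the picture so much.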

Is Apple’s new 2FA Really Secure? (Answer: It’s Pretty Solid)

On September 17, 2014 by Jonathan Zdziarski

I’ve recently updated my TL;DR regarding the recent celebrity iCloud hacks. I now summarize Apple’s latest changes to improve their 2-factor authentication (2FA). Apple has implemented not just a band-aid, but a very good security solution to protect iCloud accounts, by completely reinventing their own 2-step validation (sorry, I couldn’t resist). As a result, users who have activated this feature will need to provide a one-time validation code in order to access their iCloud account from a web browser, or to provision iCloud from an iOS device. As my TL;DR suggests, this new technical measure would have prevented the celebrity iCloud hacks. So are Apple’s new techniques really secure, even in light of the very technically un-savvy users who fall victim to iCloud phishing attacks?

While Apple has done their part to improve the security of iCloud, less than savvy users can still screw it up. First of all, by not having the feature turned on in the first place. Apple’s two-step validation process is opt-in, and therefore it’s important to make sure that users know about and understand the benefits to enabling this feature. In my opinion, Apple should force users to have this feature on if they enable Photo Stream or iCloud Backups, as they are likely to keep sensitive content in the cloud without necessarily knowing it.

So you’re more savvy than that. You’ve already activated the new 2FA on your iCloud account. Are you truly safe from future phishing attacks?

Apple Addresses iOS Surveillance and Forensics Vulnerabilities

On September 9, 2014 by Jonathan Zdziarski

After some preliminary testing, it appears that a number of vulnerabilities reported in my recent research paper and subsequent talk at HOPE/X have been addressed by Apple in iOS 8. The research outlined a number of risks for wireless remote surveillance, deep logical forensics, and other types of potential privacy intrusions fitting certain threat models such as high profile diplomats or celebrities, targeted surveillance, or similar threats.

Given that Apple has dropped the NDA for iOS 8, it appears that I can write freely about the improvements they’ve made to address the vulnerabilities I’ve outlined in my paper. Here’s a summary of what’s been fixed, what risks still remain, and some steps you can take to help protect the data on your device.

TL;DR: Hacked Celebrity iCloud Accounts

On September 2, 2014 by Jonathan Zdziarski

(This document will continue to evolve as more information becomes available)

Earlier this week, a number of compromised celebrity iCloud accounts were leaked onto the Internet. Initially, @SwiftOnSecurity was kind enough to post some metadata at my request for exif information on two of the accounts’ files, and I’ve since gathered much more information including directory structures, file naming schemes, additional timestamp data, and other information through private channels.

An Example of Forensic Science at its Worst: US v. Brig. Gen. Jeffrey Sinclair

On August 24, 2014 by Jonathan Zdziarski

In early 2014, I provided material support in what would end up turning around what was, in their own words, the US Army’s biggest case in a generation, and much to the dismay of the prosecution team that brought me in to assist them. In the process, it seems I also prevented what the evidence pointed to as an innocent man, facing 25 years in prison, from becoming a political scapegoat. Strangely, I was not officially contracted on the books, nor was I asked to sign any kind of NDA or exposed to any materials marked classified (nor did I have a clearance at that time), so it seems safe to talk about this, and I think it’s an important case.

While I would have thought other cases like US v. Manning would have been considered more important than this to the Army (and certainly to the public), this case – US v. Brig. Gen. Jeffrey Sinclair with the 18th Airborne Corps – could have seriously affected the Army directly, and in a more severe way. It was during this case that President Obama was doing his usual thing of making strongly worded comments with no real ideas about how to fix anything – this time against sexual abuse in the military. Simultaneously, however, the United States Congress was getting ramped up to vote on a military sexual harassment bill. At stake was a massive power grab by Congress that would have resulted in stripping the Army of its authority to prosecute sexual harassment cases and other felonies. The Army maintaining their court martial powers in this area seemed to be the driving cause that made this case vastly more important to them than any other in recent history. At the heart of prosecuting Sinclair was the need to prove that the Army was competent enough to run their own courts. With that came what appeared to be a very strong need to make an example out of someone. I didn’t have a dog in this fight at all, but when the US Army comes asking for your help, of course you want to do what you can to serve your country. I made it clear, however, that I would deliver unbiased findings whether they favored the prosecution or not. After finishing my final reports and looking at all of the evidence, followed by the internal US Army drama that went with it, it became clear that this whole thing had – up until this point – involved too much politics and not enough fair trial.

White Paper: Identifying back doors, attack points, and surveillance mechanisms in iOS devices

On August 23, 2014 by Jonathan Zdziarski

I received word from the editor-in-chief that the author of an accepted paper has permission to publish it on his website, and so I am now making my research available to anyone who wishes to read it. The following paper, “Identifying back doors, attack points, and surveillance mechanisms in iOS devices” first appeared published in The International Journal of Digital

Security Firm Stroz Friedberg Has Validated My Latest Research

On August 13, 2014 by Jonathan Zdziarski

Security firm Stroz Friedberg has published findings validating the technical claims of my latest research, by independently reproducing them against iOS 7 and iOS 8 Beta 4 (NOTE: as I mentioned, Apple has already begun addressing these issues in Beta 5). Interestingly, the firm has also published an open-source proof of concept tool named unTRUST

A Post-Mortem on ZDNet’s Smear Campaign

On August 6, 2014 by Jonathan Zdziarski

A few days after I gave a talk at the HOPE/X conference titled, “Identifying Backdoors, Attack Points, and Surveillance Mechanisms in iOS Devices”, ZDNet published what their senior editor has described privately to me as an opinion piece, but passed it off as a factual article in an attempt to make headlines at my expense. Now that things have had time to settle down, I’ve taken the time to calmly write up a post-mortem describing what actually happened as well as some behind-the-scenes details that may shed some light on the drama we’ve seen from ZDNet and one of its writers over the past couple of weeks. Let me say first that this is the last time I will address this matter, and that I have no desire to continue to discuss it, or engage with ZDNet or their writer. In fact, I haven’t engaged with either party since this all transpired a week or so after my talk, in spite of repeated attempts to bait me with more personal attacks and false claims of harassment.

At HOPE/X, I gave a very carefully-worded talk describing a number of “high value forensic services” that had not been disclosed by Apple to the consumer (some not even to developers), such as the com.apple.mobile.file_relay service, which I admitted to the audience I had “no better word for” than a “backdoor” to bypass the consumer’s backup encryption on iOS devices; this doesn’t necessarily mean a nefarious backdoor, but can simply be an engineering backdoor, like how supervisor passwords or other mechanisms work – a simple bypass to make things convenient. A number of news agencies reached out to me, and I took time to explain to each journalist that this was nothing to panic about, as the threat models were very limited (specifically geared towards law enforcement forensics and potentially foreign espionage). I also explained that I did not believe there was any conspiracy here by Apple. Reporters from Ars Technica, Reuters, The Register, Tom’s Guide, InfoSec Institute, and a number of others spoke to me and got all the time they wanted. You can see that these journalists each published relatively balanced and non-alarmist stories; even The Register, who prides themselves on outlandish headlines, if you read their story, was actually quite level headed about the matter. A number of other news agencies, who had not reached out to me, published sensationalist stories with crazy claims of an NSA conspiracy, secret backdoors, and other ridiculous nonsense. I tried very hard to throw cold water on those ideas both in my talk and in big letters on my first blog entry, with “DON’T PANIC” and instructions for journalists.

ZDNet was among the news agencies that had initially published a sensationalist story without approaching me first for questions.

Apple is Making Progress

On August 5, 2014 by Jonathan Zdziarski

Apple’s new, relaxed NDA rules appear to allow me to talk about the iOS 8 betas. I will hold off on the deep technical details until the final release, as I see that Apple is striving to make a number of improvements to the overall security of their product. What I will say is that so far, things look quite promising. Shortly after my talk at HOPE/X, citing my paper, “Identifying Backdoors, Attack Points, and Surveillance Mechanisms in iOS Devices”, along with a proof of concept, Apple released Beta 5, and a number of the “high value forensic services” I’d outlined in my paper have now been disabled wirelessly, including the packet sniffer service that got many upset (note: we’ve known about the packet sniffer for years, but it was never disclosed to consumers that it was active outside of developer mode). Apple’s fixes are clearly still a work in progress, and not all of my security concerns have been addressed yet, but it does show that Apple does care about the security of their product, and likely wants to prevent their APIs from being abused by both malicious hackers and government. Given that a number of my threat models involved government spying, it feels good to know that Apple has taken my research seriously enough to address these concerns. Keep in mind, the threat model we’re dealing with also includes foreign governments, many of which have long histories of spying on our country’s diplomats. I’ve taught a number of counter-forensics classes to diplomatic infosec personnel, and the threats of spying on data are very real for these people, to the degree that a lot of cloak-and-dagger goes into play on both sides, especially when visiting technologically hostile countries.

Apple’s Authentication Scheme and “Backdoors” Discussion

On July 31, 2014 by Jonathan Zdziarski

I’ve heard a number of people make an argument about Apple’s authentication front-ending the services I’ve described in my paper, including the “file relay” service, which has opened up a discussion about the technical definition of a backdoor. The primary concern I’m hearing, including from Apple, is that the user has to authenticate before having access to this service, which one would normally expect would preclude a service from being a backdoor by some (but not all) definitions. This is a valid point, and in fact I acknowledge this thoroughly in my paper. Let me explain, however, why this argument about authentication is more complicated and subtle than it seems.

Most authentication schemes are encapsulated from weakest to strongest, and are also isolated from one another; certain credentials get you into certain systems, but not into others. You may have a separate password for Twitter, Facebook, or other accounts, and they only interoperate if you’re using a single sign-on mechanism (for example, OAuth) to use that same set of credentials on other sites. If one gets stolen, then, only the services that are associated with those credentials can be accessed. Those authentication mechanisms are often protected with even stronger authentication systems. For example, your password might be stored on Apple’s keychain, which is protected with an encryption that is tied directly to your desktop password. Your entire disk might also be encrypted using full disk encryption, which protects the keychain (and all of your other data) with yet another (usually stronger) password. So you end up with a hierarchy of authentication mechanisms that get protected by stronger authentication mechanisms, and sometimes even stronger ones on top of that. Apple’s authentication scheme for iOS, however, is the opposite of this, where the strongest forms of authentication are protected by the weakest – creating a significant security problem in their design. The way Apple has designed the iOS authentication scheme is that the weakest forms of authentication have complete control to bypass the stronger forms of authentication. This allows services like file relay, which bypasses backup encryption, to be accessed with the weakest authentication mechanisms (PIN or pair record), when end-users are relying on the stronger “backup encryption password” to protect them.
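The inversion described above can be captured in a toy model. This is a deliberately simplified sketch, not a description of how iOS actually implements these checks: the `Credentials` class and the two access functions are hypothetical names invented for illustration, and the rules encode only what the paragraph above states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Credentials:
    """What an attacker or examiner holds (hypothetical model)."""
    pair_record: bool = False       # the weaker credential
    backup_password: bool = False   # the stronger credential users rely on


def can_read_encrypted_backup(creds: Credentials) -> bool:
    """The normal path: a pairing AND the backup encryption password."""
    return creds.pair_record and creds.backup_password


def can_use_file_relay(creds: Credentials) -> bool:
    """The path described above: the weaker credential alone is enough."""
    return creds.pair_record


if __name__ == "__main__":
    stolen_pairing = Credentials(pair_record=True)
    print("encrypted backup:", can_read_encrypted_backup(stolen_pairing))
    print("file relay:      ", can_use_file_relay(stolen_pairing))
```

In a conventional hierarchy the second function would demand at least as much as the first; here the weaker credential opens a path around the stronger one.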

Oxygen Forensics: Latest Forensics Tool to Exploit Apple’s “Diagnostic Service” to Bypass Encryption

On July 31, 2014 by Jonathan Zdziarski

While Apple may claim that a key subject of my talk, “Identifying Backdoors, Attack Points, and Surveillance Mechanisms in iOS Devices” (com.apple.mobile.file_relay), is for diagnostics, a recent announcement from the makers of the fantastic Oxygen Forensics suite shows strong evidence that law enforcement forensics is continuing to take every legal technical option available to

Roundup of iOS Backdoor (AKA “Diagnostic Service”) Related Tech Articles

On July 28, 2014 by Jonathan Zdziarski

There are a lot of terrible news articles out there, and a lot of terrible “journalists” who have either over-hyped my research, or dismissed it entirely. After ZDNet’s utterly horrible diatribe about my research, I posted a proof-of-concept to help further clarify what was and wasn’t possible. Unfortunately, the FUD has continued, and so I

Dispelling Confusion and Myths: iOS Proof-of-Concept

On July 25, 2014 by Jonathan Zdziarski

Here’s my iOS Backdoor Proof-of-Concept:

http://youtu.be/z5ymf0UsEuw

When I originally gave my talk, it was to a small room of hackers at a hacker conference with a strong privacy theme. With two hours of content to fit into 45 minutes, I not only had no time to demo a POC, but felt that demonstrating a POC of the personal data you could extract from a locked iOS device might be construed as attempting to embarrass Apple or to be sensationalist. After the talk, I did ask a number of people that I know attended if they felt I was making any accusations or outrageous statements, and they told me no, that I presented the information and left it to the audience to draw conclusions. They also mentioned that I was very careful with my wording, so as not to attempt to alarm people. The paper itself was published in a reputable forensics journal, and was peer-reviewed, edited, and accepted as an academic paper. Both my paper and presentation made some very important security and privacy concerns known, and the last thing I wanted to do was to fuel the fire for conspiracy theorists who would interpret my talk as an accusation that Apple is working with NSA. The fact is, I’ve never said Apple was conspiring secretly with any government agency – that’s what some journalists have concluded, and with no evidence mind you. Apple might be, sure, but then again they also might not be. What I do know is that there are a number of laws requiring compliance with customer data, and that Apple has a very clearly defined public law enforcement process for extracting much the same data off of passcode-locked iPhones as the mechanisms I’ve discussed do. In this context, what I deem backdoors (which Apple claims are for their own use), attack points, and so on become – yes suspicious – but more importantly abuse-prone, and can and have been used by government agencies to acquire data from devices that they otherwise wouldn’t be able to access with forensics software. 
As this deals with our private data, this should all be very open to public scrutiny – but some of these mechanisms had never been disclosed by Apple until after my talk.

Apple Confirms “Backdoors”; Downplays Their Severity

On July 23, 2014 by Jonathan Zdziarski

Apple responded to allegations of hidden services running on iOS devices with this knowledge base article. In it, they outlined three of the big services that I outlined in my talk. So again, Apple has, in a traditional sense, admitted to having backdoors on the device specifically for their own use.

A backdoor simply means that it’s an undisclosed mechanism that bypasses some of the front end security to make access easier for whoever it was designed for (OWASP has a great presentation on backdoors, where they are defined like this). It’s an engineering term, not a Hollywood term. In the case of file relay (the biggest undisclosed service I’ve been barking about), backup encryption is being bypassed, as well as basic file system and sandbox permissions, and a separate interface is there to simply copy a number of different classes of files off the device upon request; something that iTunes (and end users) never even touch. In other words, this is completely separate from the normal interfaces on the device that end users talk to through iTunes or even Xcode. Some of the data Apple can get is data the user can’t even get off the device, such as the user’s photo album that’s synced from a desktop, screenshots of the user’s activity, geolocation data, and other privileged personal information that the device even prevents its own users from accessing. This weakens privacy by completely bypassing the end user backup encryption that consumers rely on to protect their data, and also gives the customer a false sense of security, believing their personal data is going to be encrypted if it ever comes off the device.

Apple Responds

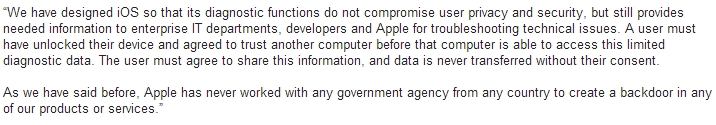

On July 21, 2014 by Jonathan Zdziarski

In a response from Apple PR to journalists about my HOPE/X talk, it looks like Apple might have inadvertently admitted that, in the most widely accepted sense of the word, they do indeed have backdoors in iOS, though they claim that the purpose is for “diagnostics” and “enterprise”.

The problem with this is that these services dish out data (and bypass backup encryption) regardless of whether or not “Send Diagnostic Data to Apple” is turned on or off, and whether or not the device is managed by an enterprise policy of any kind. So if these services were intended for such purposes, you’d think they’d only work if the device was managed/supervised or if the user had enabled diagnostic mode. Unfortunately this isn’t the case and there is no way to disable these mechanisms. As a result, every single device has these features enabled and there’s no way to turn them off, nor are users prompted for consent to send this kind of personal data off the device.

Slides from my HOPE/X Talk

On July 18, 2014 by Jonathan Zdziarski

Identifying Backdoors, Attack Points, and Surveillance Mechanisms in iOS Devices

In addition to the slides, you may be interested in the journal paper published in the International Journal of Digital Forensics and Incident Response. Please note: they charge a small fee for all copies of their journal papers; I don’t actually make anything off of