The Dangers of the Burr Encryption Bill

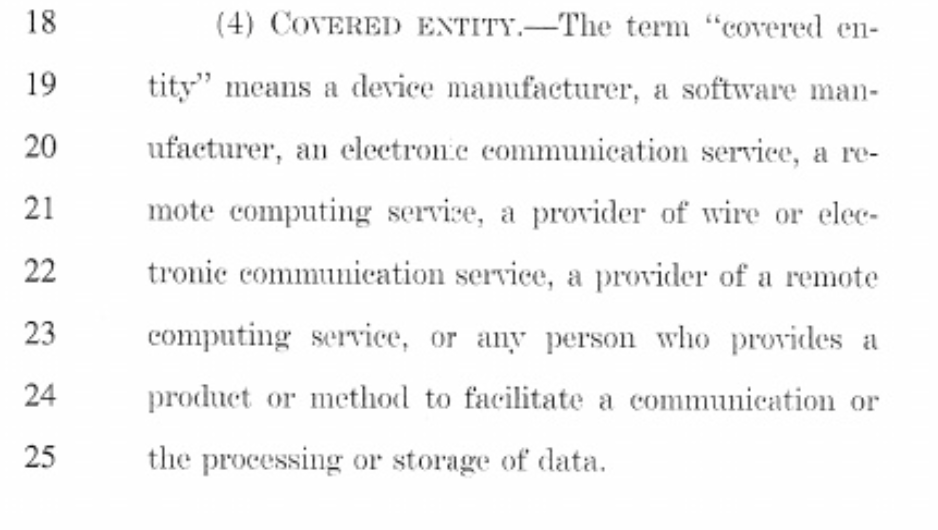

On April 8, 2016 by Jonathan Zdziarski

The Burr Encryption Bill – Discussion Draft dropped last night, and proposes legislation to weaken encryption standards for all United States citizens and corporations. The bill itself is a hodgepodge of technical ineptitude combined with pockets of contradiction. I would cite the most dangerous parts of the bill, but the bill in its entirety is dangerous, not just for its intended uses but also for all of the uses that aren’t immediately apparent to the public.

The bill, in short, requires that anyone who develops features or methods to encrypt data must also decrypt the data under a court order. This applies not only to large companies like Apple, but could be used to punish developers of open source encryption tools, or even encryption experts who invent new methods of encryption. Its broad wording allows the government to hold virtually anyone responsible for what a user might do with encryption. A good parallel to this would be holding a vehicle manufacturer responsible for a customer that drives into a crowd. Only it’s much worse: The proposed legislation would allow the tire manufacturer, as well as the scientists who invented the tires, to be held liable as well.

An Open Letter to FBI Director James Comey

On April 7, 2016 by Jonathan Zdziarski

Mr. Comey,

Sir, you may not know me, but I’ve impacted your agency for the better. For several years, I have been assisting law enforcement as a private citizen, including the Federal Bureau of Investigation, since the advent of the iPhone. I designed the original forensics tools and methods that were used to access content on iPhones, which were eventually validated by NIST/NIJ and ingested by FBI for internal use into your own version of my tools. Prior to that, FBI issued a major deviation allowing my tools to be used without validation, due to the critical need to collect evidence on iPhones. They were later the foundation for virtually every commercial forensics tool to make it to market at the time. I’ve supported thousands of agencies worldwide for several years, trained our state, federal, and military in iOS forensics, assisted hands-on in numerous high-profile cases, and invested thousands of hours of continued research and development for a suite of tools I provided at no cost – for the purpose of helping to solve crimes. I’ve received letters from a former lab director at FBI’s RCFL, DOJ, NASA OIG, and other agencies citing numerous cases that my tools have been used to help solve. I’ve done what I can to serve my country, and asked for little in return.

First let me say that I am glad FBI has found a way to get into Syed Farook’s iPhone 5c. Having assisted with many cases, I understand from firsthand experience what you are up against, and have enormous respect for what your agency does, as well as others like it. Often times it is that one finger that stops the entire dam from breaking. I would have been glad to assist your agency with this device, and even reached out to my contacts at FBI with a method I’ve since demonstrated in a proof-of-concept. Unfortunately, in spite of my past assistance, FBI lawyers prevented any meetings from occurring. But nonetheless, I am glad someone has been able to reach you with a viable solution.

How Apple Can Make Their FBI Problems Go Away

On March 31, 2016 by Jonathan Zdziarski

An adversary has an unknown exploit, and it could be used on a large scale to attack your platform. Your threat isn’t just your adversary, but also anyone who developed or sold the exploit to the adversary. The entire chain of information from conception to final product could be compromised anywhere along the way, and sold to a nation state on the side, blackmailed or bribed out of someone, or just used maliciously by anyone with knowledge or access. How can Apple make this problem go away?

The easiest technical solution is a boot password. The trusted boot chain has been impressively solid for the past several years, since Apple minimized its attack surface after the 24kpwn exploit in the early days. Apple’s trusted boot chain consists of a multi-stage boot loader, with each phase of boot checking the integrity of the next. Having been stripped down, it is now a shell of the hacker’s smorgasbord it used to be. It’s also a very direct and visible execution path for Apple, and so if there ever is an alleged exploit of it, it will be much easier to audit the code and pin down points of vulnerability.
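To make the "each phase of boot checking the integrity of the next" idea concrete, here is a minimal sketch of a chain of trust. It is a simplification under stated assumptions: the stage names are made up, and it uses embedded SHA-256 digests where a real boot chain would verify cryptographic signatures. It is not Apple's implementation, only an illustration of why tampering with any one stage stops the boot.

```python
import hashlib


def digest(blob: bytes) -> str:
    return hashlib.sha256(blob).hexdigest()


class Stage:
    """One boot stage. next_digest is the expected measurement of the
    following stage (None for the last one). Hypothetical structure."""

    def __init__(self, name, code, next_digest=None):
        self.name = name
        self.code = code
        self.next_digest = next_digest


def measured(stage: Stage) -> str:
    # The measurement covers the stage's code and the digest it embeds,
    # so changing either one changes the measurement.
    return digest(stage.code + (stage.next_digest or "").encode())


def build_chain(images):
    """images: list of (name, code) in boot order."""
    stages, next_digest = [], None
    for name, code in reversed(images):
        stage = Stage(name, code, next_digest)
        stages.append(stage)
        next_digest = measured(stage)
    stages.reverse()
    return stages


def boot(stages, root_digest):
    """root_digest plays the role of a value fixed in read-only memory."""
    expected, booted = root_digest, []
    for stage in stages:
        if measured(stage) != expected:
            raise ValueError(f"integrity check failed at {stage.name}")
        booted.append(stage.name)
        expected = stage.next_digest
    return booted


chain = build_chain([("stage1", b"loader"), ("stage2", b"boot"), ("kernel", b"os")])
root = measured(chain[0])
print(boot(chain, root))
```

Because the path is a single straight line of checks, auditing it means auditing each comparison in turn, which is the property the paragraph above leans on.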

Why a Software Exploit Would be a Threat to Secure Enclave Devices

On March 30, 2016 by Jonathan Zdziarski

As speculation continues about the FBI’s new toy for hacking iPhones, the possibility of a software exploit continues to be a point of discussion. In my last post, I answered the question of whether such an exploit would work on Secure Enclave devices, but I didn’t fully explain the threat that persists regardless.

For sake of argument, let’s go with the theory that FBI’s tool is using a software exploit. The software exploit probably doesn’t (yet) attack the Secure Enclave, as Farook’s 5c didn’t have one. But this probably also doesn’t matter. Let’s assume for a moment that the exploit being used could be ported to work on a 64-bit processor. The 5c is 32-bit, so this assumes a lot. Some exploits can be ported, while others just won’t work on the 64-bit architecture. But let’s assume that either the work has already been done or will be done shortly to do this; a very plausible scenario.

Viability of Software Exploit and SEP Devices

On March 29, 2016 by Jonathan Zdziarski

A number of people have been asking me my thoughts on the viability of a software exploit against Secure Enclave enabled devices, so here’s my opinion:

First, it is interesting to note that the way the FBI categorizes this tool’s capabilities is “5c” and “9.0”; namely, hardware model and firmware version. They won’t confirm that it’s the only combination that the tool runs on, but have noted that these are the two factors they’re categorizing it by. This is consistent with how exploit-based forensics tools have functioned in the past. My own forensics tools (for older iPhones) came in different modules that were tailored for a specific hardware platform and firmware version. This is because most exploits require taking Apple’s own firmware and patching it; those patches require slightly different offsets in the kernel (and possibly boot loader). The software to patch is also going to be slightly different for each hardware and firmware combination. So without saying really anything, FBI has kind of hinted that this might be a software exploit. Had this been a hardware attack, such as a NAND mirroring technique, firmware version likely wouldn’t be a point of discussion, as the technique’s feasibility is dependent on hardware revision. This is all conjecture, of course, but it is worth noting that the hints are already there.

If the FBI did in fact use a software exploit, the question then becomes one of how viable it is on other platforms. Typically, a software exploit of this magnitude could very well take advantage of vulnerabilities that have long existed in the firmware, making it more than likely that the exploit may also be effective (possibly with a little tailoring) against older versions of iOS. Even if the exploit today was tailored specifically for this device, adjusting offsets and patching slightly different copies of Apple’s firmware is a relatively painless process. A number of open source tools even exist to find and patch the correct bytes in decrypted Apple kernels.
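Why "adjusting offsets" is relatively painless can be shown with a toy example. The sketch below is purely illustrative: the byte pattern, the replacement, and the two "builds" are invented placeholders, not real firmware. It only shows the general idea that the same byte sequence sits at a different offset in each build, and that a pattern search recovers the offset instead of hard-coding it.

```python
def locate(image: bytes, pattern: bytes) -> int:
    """Return the single offset where pattern occurs, or raise."""
    first = image.find(pattern)
    if first == -1:
        raise LookupError("pattern not found")
    if image.find(pattern, first + 1) != -1:
        raise LookupError("pattern is ambiguous")
    return first


def apply_patch(image: bytes, pattern: bytes, replacement: bytes):
    """Replace pattern in place and report where it was found."""
    if len(pattern) != len(replacement):
        raise ValueError("replacement must keep the image size unchanged")
    offset = locate(image, pattern)
    patched = bytearray(image)
    patched[offset:offset + len(pattern)] = replacement
    return bytes(patched), offset


# Hypothetical placeholder bytes, chosen only for the demonstration.
PATTERN = bytes.fromhex("deadbeef01")
REPLACEMENT = bytes.fromhex("deadbeef00")

# Two made-up builds: same code sequence, shifted by surrounding changes.
builds = {
    ("modelA", "1.0"): b"\x11" * 16 + PATTERN + b"\x22" * 8,
    ("modelA", "1.1"): b"\x11" * 40 + PATTERN + b"\x22" * 8,
}

for key, image in builds.items():
    patched, offset = apply_patch(image, PATTERN, REPLACEMENT)
    print(key, "patched at offset", offset)
```

The per-build work reduces to confirming the pattern is still present and unique, which is why a tool categorized by hardware model and firmware version fits the software-exploit theory.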

FBI Breaks Into San Bernardino iPhone

On March 28, 2016 by Jonathan Zdziarski

As expected, the FBI has succeeded in finding a method to recover the data on the San Bernardino iPhone, and now the government can see all of the cat pictures Farook was keeping on it. We don’t know what method was used, as it’s been classified. Given the time frame and the details of the case, it’s possible it could have been the hardware method (NAND mirroring) or a software method (exploitation). Many have speculated on both sides, but your guess is as good as mine. What I can tell you are the implications.

My Take on FBI’s “Alternative” Method

On March 24, 2016 by Jonathan Zdziarski

FBI acknowledged today that there “appears” to be an alternative way into Farook’s iPhone 5c – something that experts have been shouting for weeks now; in fact, we’ve been saying there are several viable methods. Before I get into which method I think is being used here, here are some possibilities of other viable methods and why I don’t think they’re part of the solution being utilized:

- A destructive method, such as de-capping or deconstruction of the microprocessor would preclude FBI from being able to come back in two weeks to continue proceedings against Apple. Once the phone is destroyed, there’s very little Apple can do with it. Apple cannot repair a destroyed processor without losing the UID key in the process. De-capping, acid and lasers, and other similar techniques are likely out.

- We know the FBI hasn’t been reaching out to independent researchers, and so this likely isn’t some fly-by-night jailbreak exploit out of left field. If respected security researchers can’t talk to FBI, there’s no way a jailbreak crew is going to be allowed to either.

- An NSA 0-day is likely also out, as the court briefs suggested the technique came from outside USG.

- While it is possible that an outside firm has developed an exploit payload using a zero-day, or one of the dozens of code execution vulnerabilities published by Apple in patch releases, this likely wouldn’t take two weeks to verify, and the FBI wouldn’t stop a full court press (literally) against Apple unless the technique had been reported to have worked. A few test devices running the same firmware could easily determine such an attack would work, within perhaps hours. A software exploit would also be electronically transmittable, something that an outside firm could literally email to the FBI. Even if that two weeks accounted for travel, you still don’t need anywhere near this amount of time to demonstrate an exploit. It’s possible the two weeks could be for meetings, red tape, negotiating price, and so on, but the brief suggested that the two weeks was for verification, and not all of the other bureaucracy that comes after.

- This likely has nothing to do with getting intel about the passcode or reviewing security camera footage to find Farook typing it in at a cafe; the FBI is uncertain about the method being used and needs to verify it. They wouldn’t go through this process if they believed they already had the passcode in their possession, unless it was for fasting and prayer to hope it worked.

- Breaking the file system encryption on one of NSA/CIA’s computing clusters is unlikely; that kind of brute forcing doesn’t give you a two week heads-up that it’s “almost there”. It can also take significantly longer – possibly years – to crack.

- Experimental techniques such as frankensteining the crypto engine or other potentially niche edge techniques would take much longer than two weeks (or even two months) to develop and test, and would likely also be destructive.

iOS / OS X and Forensics Trace Leakage

On March 22, 2016 by Jonathan Zdziarski

As I sit here, trying to be the good parent in reviewing my daughter’s text messages, I have to assume that I’ve made my kids miserable by having a father with DFIR skills. I’m able to dump her device wirelessly using a pairing record and a tool I designed to talk to the phone. I generate a wireless desktop backup, decrypt it, and this somehow makes my kids safer. What bothers me, as a parent, is the incredible trove of forensic artifacts that I find in my children’s data every month. Deleted messages, geolocation information, even drafts and thumbnails that had all been deleted months ago. Thousands of messages sometimes. In 2008, I wrote a small book with O’Reilly named iPhone Forensics that detailed this forensic mess. Apple has made some improvements, but the overall situation has gotten worse since then. The biggest reason the iPhone is so heavily targeted for forensics and intelligence is because of the very wide data recovery surface it provides: it’s a forensics gold mine.

To Apple’s credit, they’ve done a stellar job of improving the security of iOS devices in general (e.g. the “front door”), but we know that they, just like every other manufacturer, are still dancing on the lip of the volcano. Aside from the risk of my device being hacked, there’s a much greater issue at work here. Whether it’s a search warrant, an unlawful traffic stop in Michigan, someone stealing my backups, an ex-lover, or just leaving my phone unlocked by accident at my desk, it only takes a single violation of the user’s privacy to obtain an entire lengthy history of private information that was thought deleted. This problem is also prevalent in desktop OS X.

Free Software Always Costs Something

On March 19, 2016 by Jonathan Zdziarski

Back in the late 1960s, University of California, Berkeley, published its first public BSD licenses promoting free software that could be reused by anyone. A few years later, in the 70s, BSD Unix was released by CSRG, a research group inside of Berkeley, and laid the foundation for many operating systems (including Mac OS X) as we know them today. It gradually evolved over time to support socket models, TCP/IP, Unix’s file model, and a lot more. You’ll find traces of all of these principles – and very often, core code itself – still used 50 years later in cutting edge operating systems. The idea of “free software” (whether “free as in beer” or “free as in freedom”) is credited as a driving force behind today’s technology, multi-billion dollar fortune companies, and even the iPhone or Android device sitting in your pocket. Here’s the rub: None of it was ever really free.

AceDeceiver: Breaking Apple’s Cryptographic Leash

On March 16, 2016 by Jonathan Zdziarski

The past week, I’ve been writing all about cryptographic leashes and how they could be easily broken in the case of controlling FBI’s iOS backdoor. Surprisingly, the first serious example of this has surfaced this week. The researchers at Palo Alto Networks, who have been killing it lately with great iOS research, did a breakdown of a piece of Chinese malware known as AceDeceiver. AceDeceiver breaks the cryptographic leash baked into the iPhone’s App Store system, allowing an attacker to install applications on the host iPhone even after they’ve been revoked by Apple. While the malware, in its present form, isn’t likely to cause widespread damage, the vulnerabilities in Apple’s DRM that this presents could be used for far more malicious purposes.

AceDeceiver starts its life as malware on your desktop. In its present form, you’d have to be dumb enough to install a Chinese pirate app store in order to have to worry about this, but in a more malicious form, something like it could potentially be embedded as a trojan in legitimate software. The malware performs a man-in-the-middle attack between your computer and the App Store, and fudges the authorizations used to let your iPhone run purchased software. Think of the attack as forging a receipt, like paying for a set of towels at Target, then returning a different set. Apple has no way to check the towels (your apps) to make sure they’re the same ones, so the iPhone lets the app run since you have a valid receipt. It’s even worse than this, because the receipts aren’t tied to your iTunes account – you can pull someone else’s receipt out of the trash and return towels you never purchased. It’s this receipt that is re-used to install the malware’s own software on your iPhone by impersonating iTunes. The malware author can use his or her own receipts to load previously approved App Store software onto your phone.

Apple vs. FBI: Where We Are Now, and Where We’re Going

On March 15, 2016 by Jonathan Zdziarski

Much has happened since a California magistrate court originally granted an order for Apple to assist the FBI under the All Writs Act. For one, most of us now know what the All Writs Act is: An ancient law that was passed before the Fourth Amendment even existed, now somehow relevant to modern technology a few hundred years later. Use of this act has exploded into a legal argument about whether or not it grants carte blanche rights of the government to demand anything and everything from private companies (and incidentally, individuals) if it helps them prosecute crimes. Of course, that’s just the tip of the iceberg. We’ve seen strong debates about whether any person should be allowed to have private conversations, thoughts, or ideas that can’t later be searched, whether forcing others to work for the government violates the constitution, whether other countries will line up to exploit technology if America does, and ultimately – at the heart of all of these – whether fear of the word “terrorism” is enough to cause us all to burn our constitution.

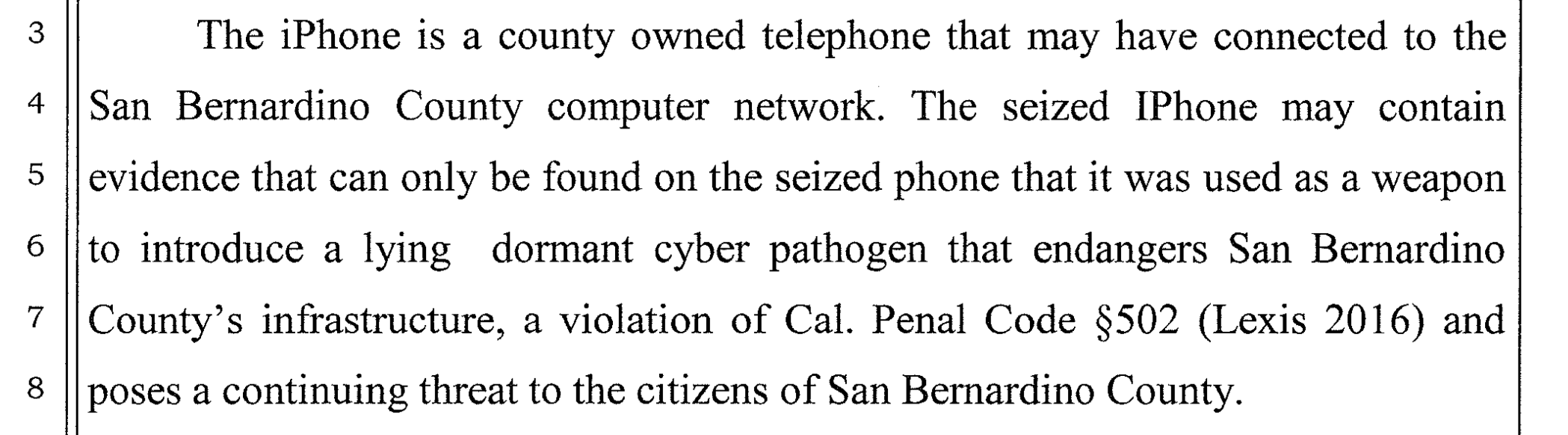

Over the past few weeks, the entire tech community has gotten behind Apple, filing a barrage of friend-of-the-court briefs on Apple’s behalf. Security experts such as myself, Crypto “Rock Stars”, constitutionalists, technologists, lawyers, and 30 Helens all agree that Apple is in the right, and that backdooring iOS would cause irreparable damage to the security, privacy, and safety of hundreds of millions of diplomats, judges, doctors, CEOs, teenage girls, victims of crimes, parents, celebrities, politicians, and all men and women around the world. Throughout the past month, legal exchanges have escalated from ice cold to white hot, and from professional to a traveling flea circus as ridiculous terms such as “lying dormant cyber pathogen” have been introduced. Congress, the courts, and the public have seen strong technical and legal arguments, impassioned pleas from victims, attempts at reason by the law enforcement community, name calling, proverbial mugs-thrown-across-the-room, uncontrollable profanity on media briefings, and just about any other form of pressure manifesting itself that one can imagine.

A Bomb on a Leash

On March 13, 2016 by Jonathan Zdziarski

The idea of a controlled explosion comes to mind when I think about pending proceedings with Apple. The Department of Justice argues that a backdoored version of iOS can be controlled in that Apple’s existing security mechanisms can prevent it from blowing up any device other than Farook’s. This is quite true. The code signing and TSS signing mechanism used to install firmware have controls that can most certainly bind a firmware bundle to a given device UDID. What’s not true is the amount of real control and protection this provides.

Think of Apple’s signing mechanisms as a kind of “leash” if you will; they provide a means of digital rights management to control any payload delivered onto the device. Where the DOJ’s argument falls into error is that their focus is too much on this leash, and too little on the payload itself. The payload in this scenario is a modified version of iOS that has a direct line into a device’s security mechanisms to both disable them and manipulate them to rapidly brute force a passcode (remotely, mind you). It’s the electronic equivalent of an explosive for an iPhone that will blow the safe open (FBI’s analogy, not mine). What Apple is being forced to design, develop, test, validate, and protect is essentially a bomb on a leash.
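The distinction between leash and payload can be sketched in a few lines. This is a toy model under loud assumptions: it uses a shared-secret HMAC as a stand-in for Apple's real asymmetric signing, and the function names, key, and device identifiers are all hypothetical. What it shows is that the authorization ticket is bound to one device, while the payload bytes are identical no matter which device the ticket names.

```python
import hashlib
import hmac


def issue_ticket(signing_key: bytes, firmware: bytes, device_id: str) -> bytes:
    """Authorize one firmware image for one device (the 'leash').
    HMAC is an illustrative stand-in for a real signature scheme."""
    message = hashlib.sha256(firmware).digest() + device_id.encode()
    return hmac.new(signing_key, message, hashlib.sha256).digest()


def device_accepts(signing_key: bytes, firmware: bytes,
                   device_id: str, ticket: bytes) -> bool:
    """The device-side check: does this ticket cover this image on me?"""
    expected = issue_ticket(signing_key, firmware, device_id)
    return hmac.compare_digest(expected, ticket)


key = b"hypothetical-signing-key"
payload = b"modified firmware image (placeholder bytes)"

ticket_a = issue_ticket(key, payload, "device-A")
print(device_accepts(key, payload, "device-A", ticket_a))  # leash holds
print(device_accepts(key, payload, "device-B", ticket_a))  # wrong device

# Nothing in the payload changed: re-targeting is one more ticket.
ticket_b = issue_ticket(key, payload, "device-B")
print(device_accepts(key, payload, "device-B", ticket_b))
```

In this model, all of the protection lives in who can issue a ticket (or bypass the check), and none of it lives in the payload, which is where the argument above says the attention belongs.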

Apple Should Own The Term “Warrant Proof”

On March 11, 2016 by Jonathan Zdziarski

The Department of Justice, in a March 10 filing, accused Apple of outrightly making “warrant proof” devices, and accused Apple of obstruction of justice by making these devices so secure that they could not be searched, even with a warrant. While these words belonged to DOJ, I think Apple should own them. If you study our state laws, federal laws, and international treaties, you’ll see many examples of intellectual property that actually are protected against warrants. Yes, there are things in this country that are deemed warrant proof.

According to the State Department, Article 27.3 of the Vienna Convention on Diplomatic Relations states that a diplomatic pouch “shall not be opened or detained”. In other words, it’s warrant proof. No law enforcement agency in our country is permitted – under international treaty – to open a diplomatic pouch, and any warrants issued are null and void. Guidelines even permit unaccompanied diplomatic pouches that are traveling without a diplomat or courier, which even further emphasizes the impetus for security of such pouches: they should have locks, and strong ones at that. Do we still have spying? Absolutely, and it’s illegal. It is not only reasonable then, but important to have a device like the iPhone – secure against illegal search and seizure.

An Example of “Warrant-Friendly Security”

On March 11, 2016 by Jonathan Zdziarski

The encryption on the iPhone is clearly doing its job. Good encryption doesn’t discriminate between attackers, it simply protects data – that’s its job, and it’s frustrating both criminals and law enforcement. The government has recently made arguments insisting that we must find a “balance” between protecting your privacy and providing a method for law enforcement to procure evidence with a warrant. If we don’t, the Department of Justice and the President himself have made it clear that such privacy could easily be legislated out of our products. Some think having a law enforcement backdoor is a good idea. Here, I present an example of what “warrant friendly” security looks like. It already exists. Apple has been using it for some time. It’s integrated into iCloud’s design.

Unlike the desktop backups that your iPhone makes, which can be encrypted with a backup password, the backups sent to iCloud are not encrypted this way. They are absolutely encrypted, but differently, in a way that allows Apple to provide iCloud data to law enforcement with a subpoena. Apple had advertised iCloud as “encrypted” (which is true) and secure. It still does advertise this today, in fact, the same way it has for the past few years:

“Apple takes data security and the privacy of your personal information very seriously. iCloud is built with industry-standard security practices and employs strict policies to protect your data.”

So with all of this security, it sure sounds like your iCloud data should be secure, and also warrant friendly – on the surface, this sounds like a great “balance between privacy and security”. Then, the unthinkable happened.

On Dormant Cyber Pathogens and Unicorns

On March 3, 2016 by Jonathan Zdziarski

Gary Fagan, the Chief Deputy District Attorney for San Bernardino County, filed an amicus brief to the court in defense of the FBI compelling Apple to backdoor Farook’s iPhone. In this brief, DA Michael Ramos made the outrageous statement that Farook’s phone might contain a “lying dormant cyber pathogen”, a term that doesn’t actually exist in computer science, let alone in information security.

CIS Files Amici Curiae Brief in Apple Case

On March 3, 2016 by Jonathan Zdziarski

CIS sought to file a friend-of-the-court, or “amici curiae,” brief in the case today. We submitted the brief on behalf of a group of experts in iPhone security and applied cryptography: Dino Dai Zovi, Charlie Miller, Bruce Schneier, Prof. Hovav Shacham, Prof. Dan Wallach, Jonathan Zdziarski, and our colleague in CIS’s Crypto Policy Project, Prof. Dan Boneh. CIS is grateful to them for offering up

Mistakes in the San Bernardino Case

On March 2, 2016 by Jonathan Zdziarski

Many sat before Congress yesterday and made their cases for and against a backdoor into the iPhone. Little was said, however, of the mistakes that led us here before Congress in the first place, and many inaccurate statements went unchallenged.

The most notable mistake the media has caught onto has been the blunder of changing the iCloud password on Farook’s account, and Comey acknowledged this mistake before Congress.

“As I understand from the experts, there was a mistake made in that 24 hours after the attack where the [San Bernardino] county at the FBI’s request took steps that made it hard—impossible—later to cause the phone to back up again to the iCloud,”

Comey’s statements appear to be consistent with court documents all suggesting that both Apple and the FBI believed the device would begin backing up to the cloud once it was connected to a known WiFi network. This essentially established that interference with evidence ultimately led to the destruction of the trusted relationship between the device and its iCloud account, which prevented evidence from being available. In other words, the mistake of trying to break into the safe caused the safe to lock down in a way that made it more difficult to get evidence out of it.

Shoot First, Ask Siri Later

On February 26, 2016 by Jonathan Zdziarski

You know the old saying, “shoot first, ask questions later”. It refers to the notion that careless law enforcement officers can often be short sighted in solving the problem at hand. It’s impossible to ask questions to a dead person, and if you need answers, that really makes it hard for you if you’ve just shot them. They’ve just blown their only chance of questioning the suspect by failing to take their training and good judgment into account. This same scenario applies to digital evidence. Many law enforcement agencies do not know how to properly handle digital evidence, and end up making mistakes that cause them to effectively kill their one shot of getting the answers they need.

In the case involving Farook’s iPhone, two things went wrong, either of which, handled properly, could have resulted in evidence being lifted off the device.

First, changing the iCloud password prevented the device from being able to push an iCloud backup. As Apple’s engineers were walking FBI through the process of getting the device to start sending data again, it became apparent that the password had been changed (suggesting they may have even seen the device try to authorize on iCloud). If the backup had succeeded, there would be very little, if anything, that could have been gotten off the phone that wouldn’t be in the iCloud backup.

Secondly, and equally damaging to the evidence, was that the device was apparently either shut down or allowed to drain after it was seized. Shutting the device down is a common – but outdated – practice in field operations. Modern device seizure not only requires that the device should be kept powered up, but also to tune all of the protocols leading up to the search and seizure so that it’s done quickly enough to prevent the battery from draining before you even arrive on scene. Letting the device power down effectively shot the suspect dead by removing any chances of doing the following:

Apple’s Burden to Protect or Perpetually Create a “Weapon”

On February 25, 2016 by Jonathan Zdziarski

As the Apple/FBI dispute continues on, court documents reveal the argument that Apple has been providing forensic services to law enforcement for years without tools being hacked or leaked from Apple. Quite the contrary, information is leaked out of Foxconn all the time, and in fact some of the software and hardware tools used to hack iOS products over the past several years (IP-BOX, Pangu, and so on) have originated in China, where Apple’s manufacturing process takes place. Outside of China, jailbreak after jailbreak has taken advantage of vulnerabilities in iOS, some with the help of tools leaked out of Apple’s HQ in Cupertino. Devices have continually been compromised and even today, Apple’s security response team releases dozens of fixes for vulnerabilities that have been exploited outside of Apple. Setting all of this aside for a moment, however, let’s take a look at the more immediate dangers of such statements.

By affirming that Apple can and will protect such a backdoor, Comey’s statement is admitting that Apple will be faced with not only the burden of breathing this forensics backdoor into existence, but must also take perpetual steps to protect it once it’s been created. In other words, the courts are forcing Apple to create what would be considered a weapon under the latest proposed Wassenaar rules, and charging them with the burden of also preventing that weapon from getting out – either the code itself, or the weaknesses that Apple would have to continue allowing to be baked into their products to allow the weapon to work.

On Ribbons and Ribbon Cutters

On February 23, 2016 by Jonathan Zdziarski

With most non-technical people struggling to make sense of the battle between FBI and Apple, Bill Gates introduced an excellent analogy to explain cryptography to the average non-geek. Gates used the analogy of encryption as a “ribbon around a hard drive”. Good encryption is more like a chastity belt, but since Farook decided to use a weak passcode, I think it’s fair here to call it a ribbon. In any case, let’s go with Gates’ ribbon analogy.

Where Gates is wrong is that the courts are not ordering Apple to simply cut the ribbon. In fact, I think there would be more in the tech sector who would support Apple simply breaking the weak password that Farook chose to use if this had been the case. Apple’s encryption is virtually unbreakable when you use a strong alphanumeric passcode, and so by choosing to use a numeric pin, you get what you deserve.
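The gap between a numeric PIN and a strong alphanumeric passcode is easy to see with some arithmetic. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes roughly 80 milliseconds per on-device guess (a figure taken from Apple's published security documentation, not from this post), assumes the escalating delays and auto-erase have been disabled, and uses passcode shapes I picked for illustration.

```python
MS_PER_GUESS = 80  # assumed cost of one on-device passcode attempt


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passcodes of exactly this length."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       ms_per_guess: int = MS_PER_GUESS) -> float:
    """Time to try every candidate at a fixed per-guess cost."""
    return keyspace(alphabet_size, length) * ms_per_guess / 1000


SECONDS_PER_YEAR = 365 * 24 * 3600

# Illustrative passcode shapes (hypothetical choices for comparison).
examples = [
    ("4-digit PIN", 10, 4),
    ("6-digit PIN", 10, 6),
    ("6 chars, lowercase + digits", 36, 6),
    ("8 chars, mixed case + digits", 62, 8),
]

for label, alphabet, length in examples:
    seconds = worst_case_seconds(alphabet, length)
    print(f"{label}: {keyspace(alphabet, length):,} candidates, "
          f"{seconds:,.0f} s worst case "
          f"({seconds / SECONDS_PER_YEAR:,.2f} years)")
```

Under these assumptions a 4-digit PIN falls in minutes, while each added character of a larger alphabet multiplies the work, which is the difference between a ribbon and something much harder to cut.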

Instead of cutting the ribbon, which would be a much simpler task, the courts are ordering Apple to invent a ribbon cutter – a forensic tool capable of cutting the ribbon for FBI – and the FBI is promising to use it on just this one phone. In reality, there’s already a line beginning to form behind Comey should he get his way. NY DA Cy Vance has stated that NYC has 175 iPhones waiting to be unlocked (which translates to roughly 1/10th of 1% of all crime in NYC for an entire year). Documents have also shown DOJ has over a dozen more such requests pending. If the promise of “just this one phone” were authentic, there would be no need to order Apple to make this ribbon cutter; they’d simply tell them to cut the ribbon.